Introduction

Welcome to Tari Labs University (TLU). Our mission is to be the premier destination for balanced and accessible learning material on blockchain, digital currency and digital assets.

We hope to make this a learning experience for us at TLU: as a means to grow our knowledge base and internal expertise or as a refresher. We think this will also be an excellent resource for anyone interested in the myriad disciplines required to understand blockchain technology.

We would like this platform to be a place of learning accessible to anyone, irrespective of their degree of expertise. Our aim is to cover a wide range of topics that are relevant to the TLU space, starting at a beginner level and extending down a path of deeper complexity.

You are welcome to contribute to our online content by submitting a pull request or issue in GitHub. To help you get started, we've compiled a Style Guide for TLU reports. Using this Style Guide, you can help us to ensure consistency in the content and layout of TLU reports.

Errors, Comments and Contributions

We would like this collection of educational presentations and videos to be a collaborative affair. We are learning along with you. Our content may not be perfect first time around, so we invite you to alert us to errors and issues or, better yet, if you know how to make a pull request, to write the correction and submit it yourself.

As much as this learning platform is called Tari Labs University and will see input from many internal contributors and external experts, we would like you to contribute to new material, be it in the form of a suggestion of topics, varying the skill levels of presentations, or posting presentations that you may feel will benefit us as a growing community. In the words of Yoda, “Always pass on what you have learned.”

Guiding Principles

If you are considering contributing content to TLU, please be aware of our guiding principles:

- The topic researched should be potentially relevant to the Tari protocol; chat to us on #tari-research on IRC if you're not sure.

- The topic should be thoroughly researched.

- A critical approach should be taken (in the academic sense), with critiques and commentaries sought out and presented alongside the main topic. Remember that every white paper promises the world, so go and look for counterclaims.

- A recommendation/conclusion section should be included, providing a critical analysis on whether or not the technology/proposal would be useful to the Tari protocol.

- The work presented should be easy to read and understand, distilling complex topics into a form that is accessible to a technical but non-expert audience. Use your own voice.

Submission Process

This is the basic submission process we follow within TLU. We would appreciate it if you, as an external contributor, follow the same process.

- Get some agreement from the community that the topic is of interest.

- Write up your report.

- Push a first draft of your report as a pull request.

- The community will peer-review the report, much the same as we would with a code pull request.

- The report is merged into the master.

- Receive the fame and acclaim that is due.

Learning Paths

In a rapidly developing field, it is very important to stay up to date with the latest trends, protocols and products that incorporate this technology.

The resources available to build your knowledge in the blockchain and cryptocurrency domains are presented in the following paths:

| Learning Path | Description | |

|---|---|---|

| 1 | Blockchain Basics | Blockchain Basics provides a broad and easily understood explanation of blockchain technology, as well as the history of value itself. Having an understanding of the value is especially important in grasping how blockchain and cryptocurrencies have the potential to reshape the financial industry. |

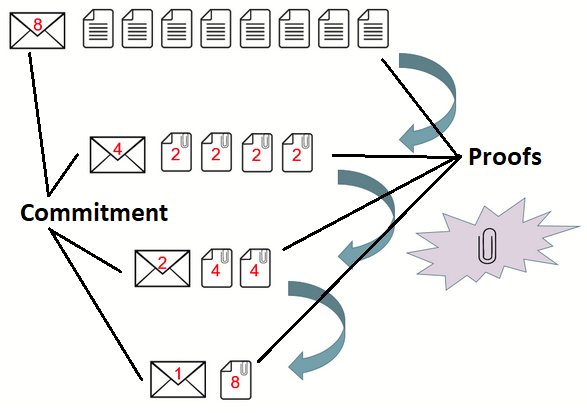

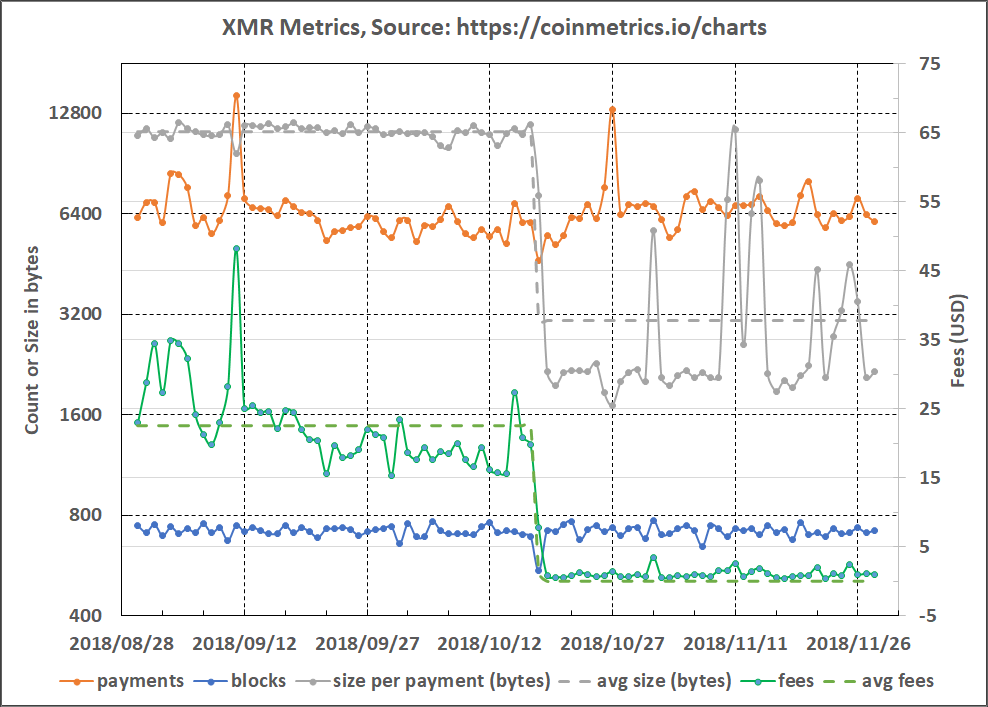

| 2 | Mimblewimble Implementation | Mimblewimble is a blockchain protocol that focuses on privacy through the implementation of confidential transactions. It enables a greatly simplified blockchain in which all spent transactions can be pruned, resulting in a much smaller blockchain footprint and efficient base node validation. The blockchain consists only of block-headers, remaining Unspent Transaction Outputs (UTXO) with their range proofs and an unprunable transaction kernel per transaction. |

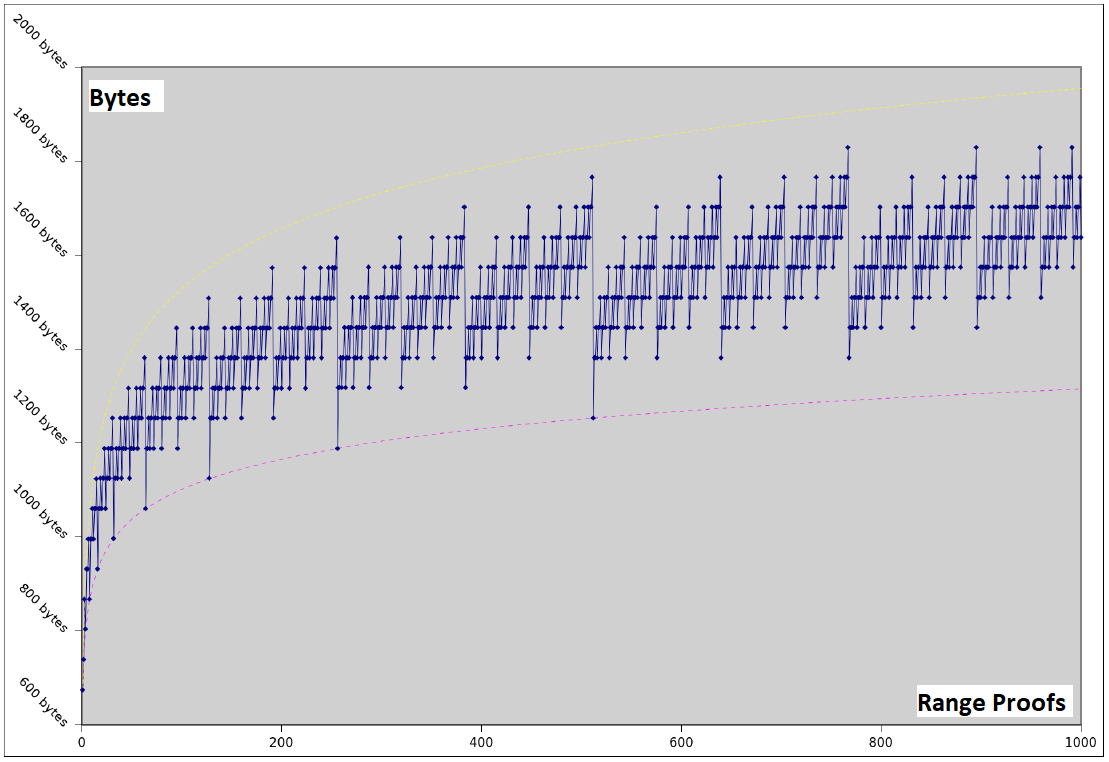

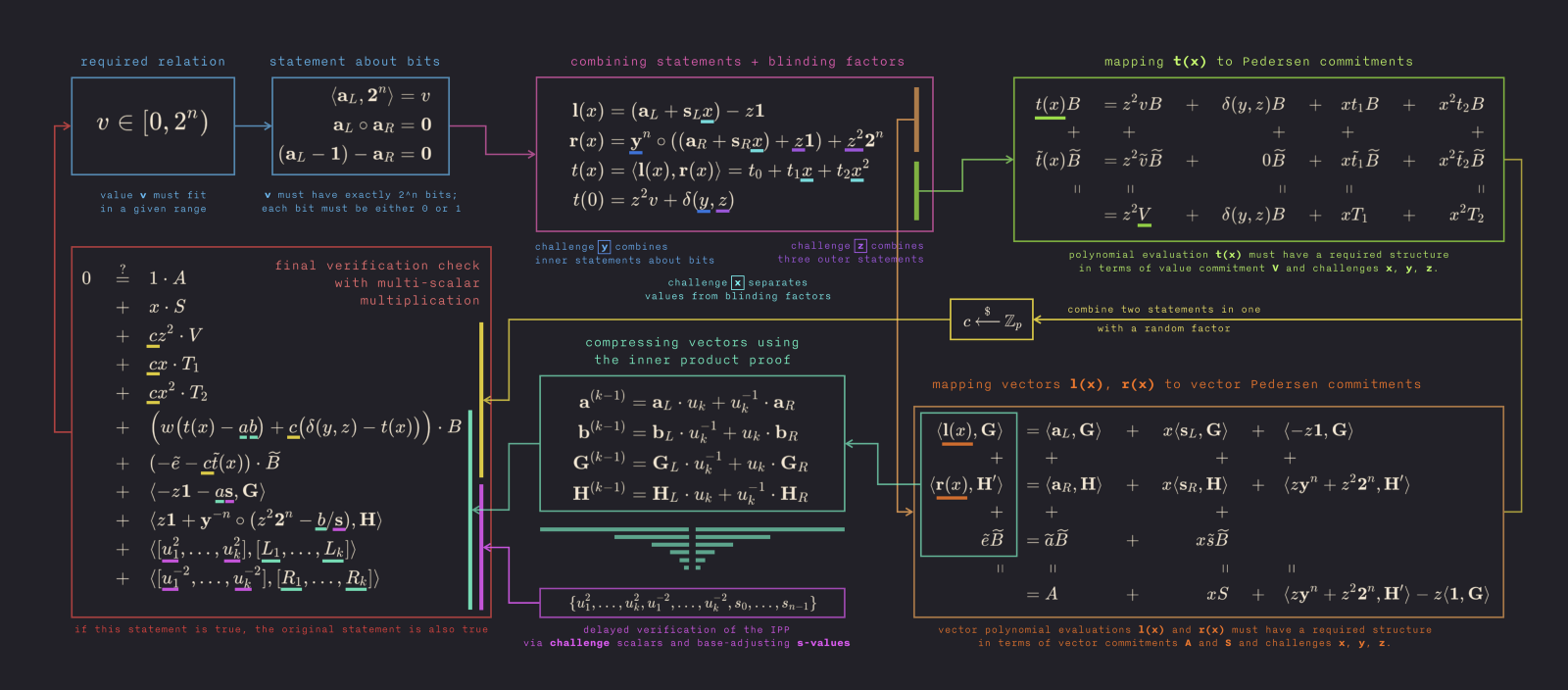

| 3 | Bulletproofs | Bulletproofs are short non-interactive zero-knowledge proofs that require no trusted setup. A bulletproof can be used to convince a verifier that an encrypted plaintext is well formed. For example, prove that an encrypted number is in a given range, without revealing anything else about the number. |

Key

| Level | Description |
|---|---|
| Beginner | These are easy to digest articles, reports and presentations |
| Intermediate | Requires the foundational knowledge and basic understanding of the content |
| Advanced | Prior knowledge and/or mathematics skills are essential to understand these information sources |

Blockchain Basics

Background

Blockchain Basics provides a broad and easily understood explanation of blockchain technology, as well as the history of value itself. Having an understanding of the value is especially important in grasping how blockchain and cryptocurrencies have the potential to reshape the financial industry.

Learning Path Matrix

For learning purposes, we have arranged report, presentation and video topics in a matrix, in categories of difficulty, interest and format.

| Topics | Type |

|---|---|

| So you think you need a blockchain? Part I | |

| So you think you need a Blockchain, Part II? | |

| BFT Consensus Mechanisms | |

| Lightning Network for Dummies | |

| Atomic Swaps | |

| Layer 2 Scaling Survey | |

| Merged Mining Introduction | |

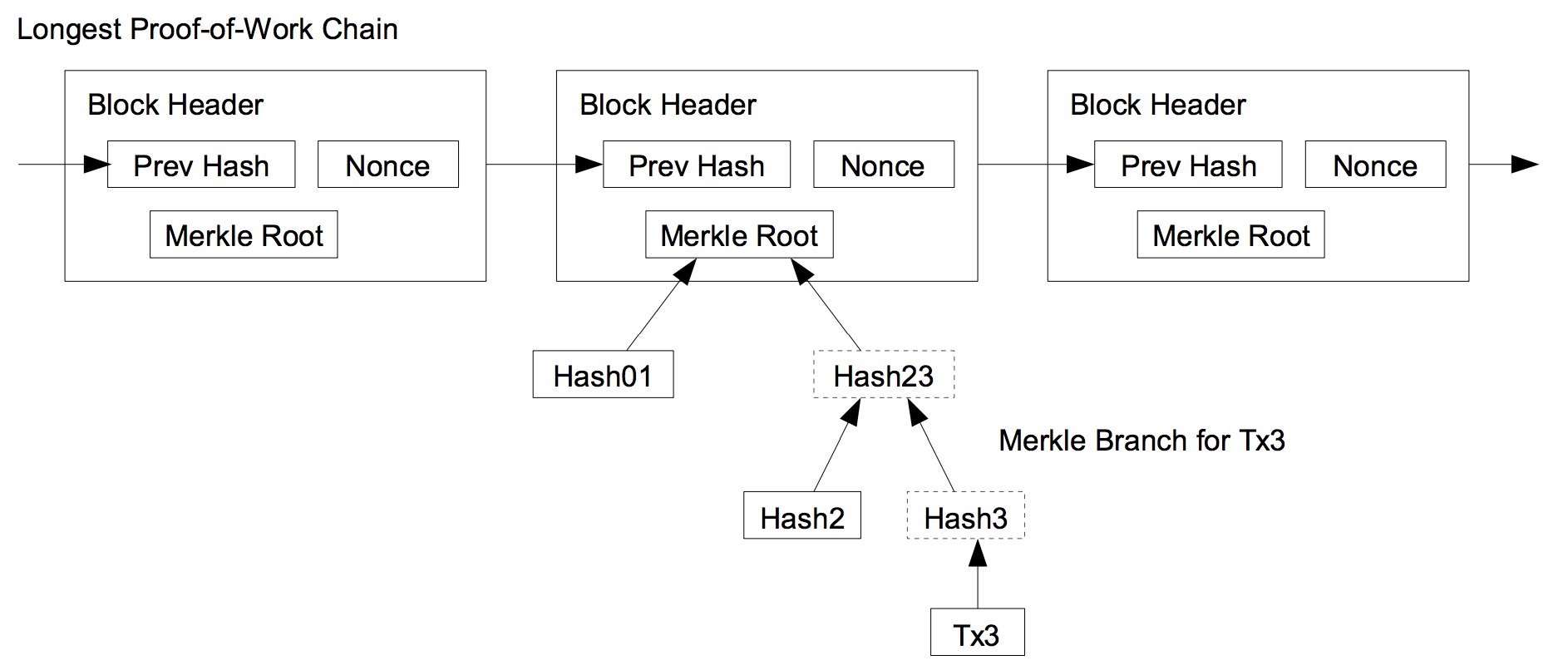

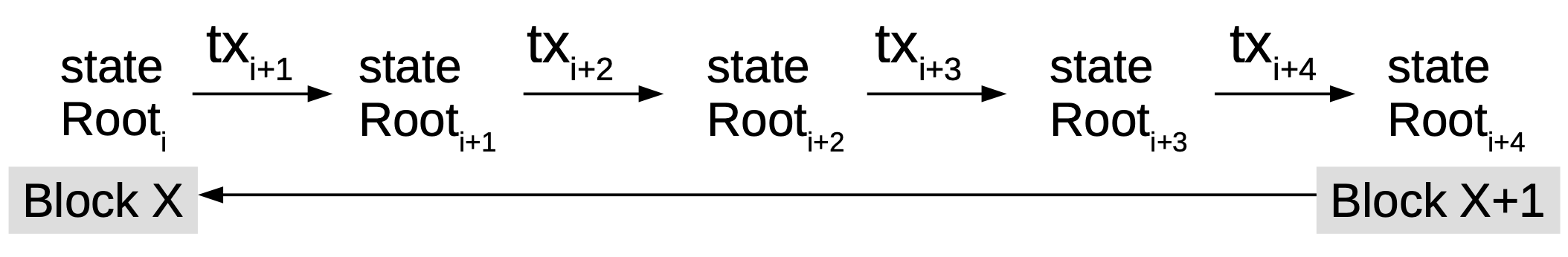

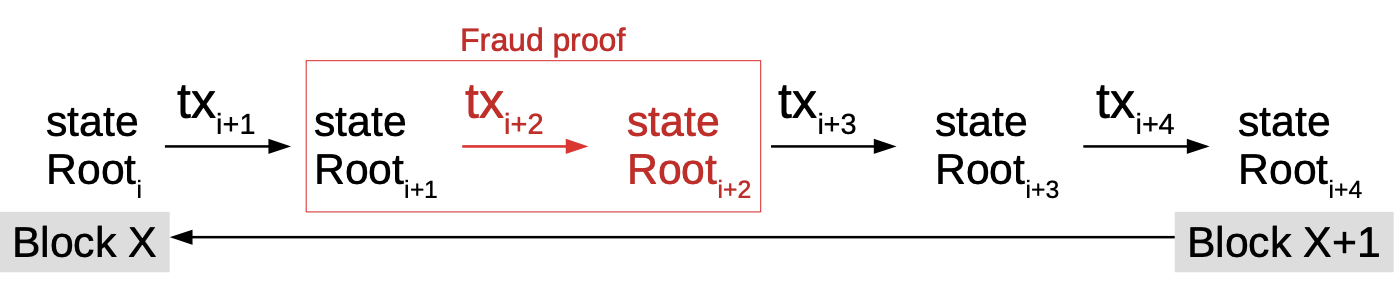

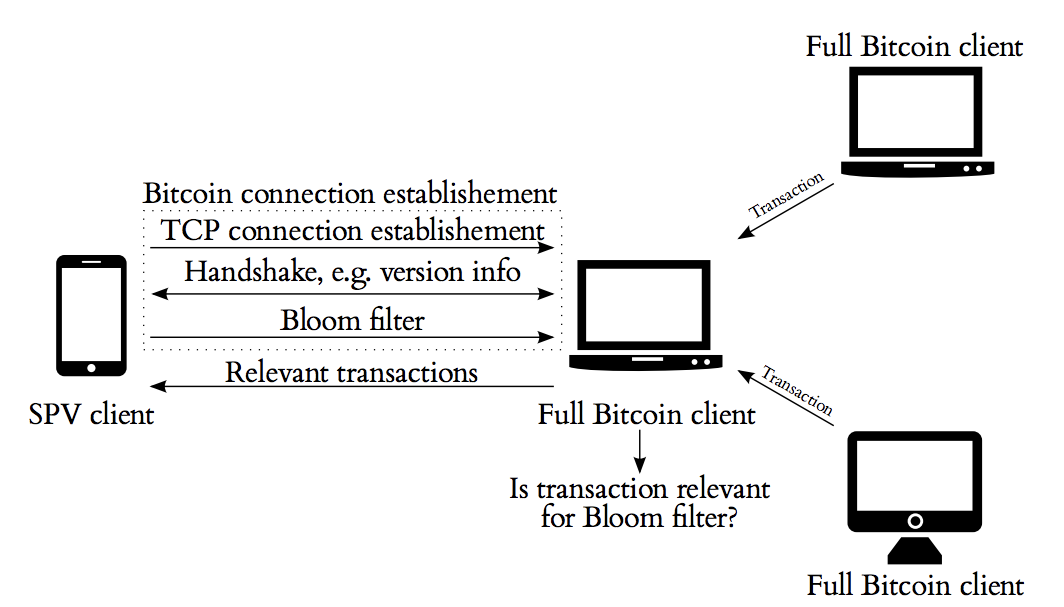

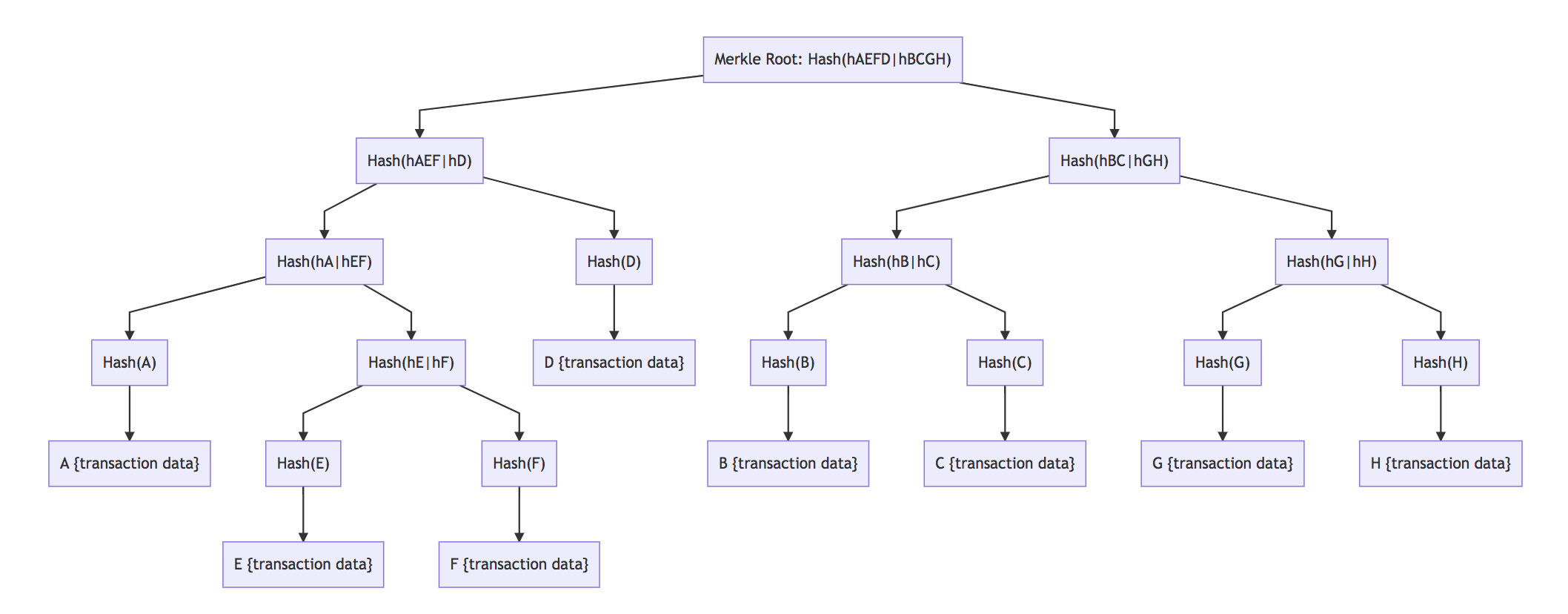

| Introduction to SPV, Merkle Trees and Bloom Filters | |

| Elliptic Curves 101 |

Mimblewimble Implementation

Background

Mimblewimble is a blockchain protocol that focuses on privacy through the implementation of confidential transactions. It enables a greatly simplified blockchain in which all spent transactions can be pruned, resulting in a much smaller blockchain footprint and efficient base node validation. The blockchain consists only of block-headers, remaining Unspent Transaction Outputs (UTXO) with their range proofs and an unprunable transaction kernel per transaction.

Learning Path Matrix

| Topics | Type |
|---|---|
| Mimblewimble | |
| Introduction to Schnorr Signatures | |
| Introduction to Scriptless Scripts | |
| Mimblewimble-Grin Block Chain Protocol Overview | |
| Grin vs. BEAM; a Comparison | |
| Grin Design Choice Criticisms - Truth or Fiction | |
| Mimblewimble Transactions Explained | |
| Mimblewimble Multiparty Bulletproof UTXO | |

Bulletproofs

Background

Bulletproofs are short non-interactive zero-knowledge proofs that require no trusted setup. A bulletproof can be used to convince a verifier that an encrypted plaintext is well formed. For example, prove that an encrypted number is in a given range, without revealing anything else about the number.

Learning Path Matrix

| Topics | Type |
|---|---|
| Bulletproofs and Mimblewimble | |
| Building on Bulletproofs | |
| The Bulletproof Protocols | |
| Mimblewimble Multiparty Bulletproof UTXO | |

Cryptography

Purpose

"The purpose of cryptography is to protect data transmitted in the likely presence of an adversary" [1].

Definitions

- From Wikipedia: Cryptography or cryptology (from Ancient Greek - κρυπτός, kryptós "hidden, secret"; and γράφειν graphein, "to write", or -λογία -logia, "study", respectively) is the practice and study of techniques for secure communication in the presence of third parties called adversaries. More generally, cryptography is about constructing and analyzing protocols that prevent third parties or the public from reading private messages; various aspects in information security such as data confidentiality, data integrity, authentication, and non-repudiation are central to modern cryptography [2].

- From SearchSecurity: Cryptography is a method of protecting information and communications through the use of codes so that only those for whom the information is intended can read and process it. The prefix "crypt" means "hidden" or "vault" and the suffix "graphy" stands for "writing" [3].

References

[1] L. Koved, A. Nadalin, N. Nagaratnam and M. Pistoia, "The Theory of Cryptography" [online], informIT. Available: http://www.informit.com/articles/article.aspx?p=170808. Date accessed: 2019‑06‑07.

[2] Wikipedia: "Cryptography" [online]. Available: https://en.wikipedia.org/wiki/Cryptography. Date accessed: 2019‑06‑07.

[3] SearchSecurity: "Cryptography". Available: https://searchsecurity.techtarget.com/definition/cryptography. Date accessed: 2019‑06‑07.

Elliptic curves 101


Introduction to Schnorr Signatures

- Overview

- Let's get Started

- Basics of Schnorr Signatures

- Schnorr Signatures

- MuSig

- References

- Contributors

Overview

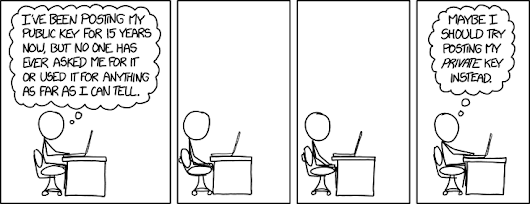

Private-public key pairs are the cornerstone of much of the cryptographic security underlying everything from secure web browsing to banking to cryptocurrencies. Private-public key pairs are asymmetric. This means that given one of the numbers (the private key), it's possible to derive the other one (the public key). However, doing the reverse is not feasible. It's this asymmetry that allows one to share the public key, uh, publicly and be confident that no one can figure out our private key (which we keep very secret and secure).

Asymmetric key pairs are employed in two main applications:

- in authentication, where you prove that you have knowledge of the private key; and

- in encryption, where messages can be encoded and only the person possessing the private key can decrypt and read the message.

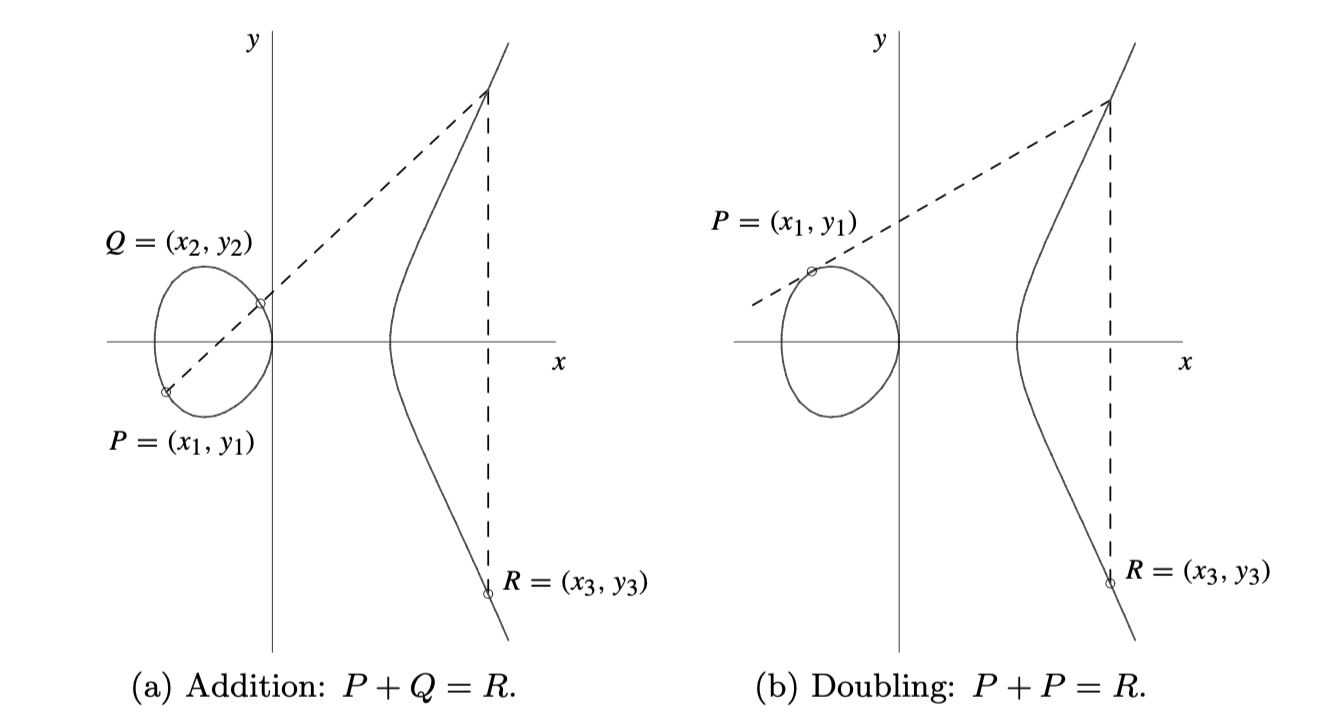

In this introduction to digital signatures, we'll be talking about a particular class of keys: those derived from elliptic curves. There are other asymmetric schemes, not least of which are those based on products of prime numbers, including RSA keys [1].

We're going to assume you know the basics of elliptic curve cryptography (ECC). If not, don't stress, there's a gentle introduction in a previous chapter.

Let's get Started

This is an interactive introduction to digital signatures. It uses Rust code to demonstrate some of the ideas presented here, so you can see them at work. The code for this introduction uses the libsecp256k1-rs library.

That's a mouthful, but secp256k1 is the name of the elliptic curve that secures a lot of things in many cryptocurrencies' transactions, including Bitcoin.

This particular library has some nice features. We've overridden the + (addition) and * (multiplication) operators so that the Rust code looks a lot more like mathematical formulae. This makes it much easier to play with the ideas we'll be exploring.

WARNING! Don't use this library in production code. It hasn't been battle-hardened, so use this one in production instead.

Basics of Schnorr Signatures

Public and Private Keys

The first thing we'll do is create a public and private key from an elliptic curve.

On secp256k1, a private key is simply a scalar integer value between 0 and ~$2^{256}$. That's roughly how many atoms there are in the universe, so we have a big sandbox to play in.

We have a special point on the secp256k1 curve called G, which acts as the "origin". A public key is calculated by adding G on the curve to itself, \( k_a \) times. This is the definition of multiplication by a scalar, and is written as:

$$ P_a = k_a G $$

Let's take an example from this post, where it is known that the public key for 1, when written in uncompressed format, is 0479BE667...C47D08FFB10D4B8.

The following code snippet demonstrates this:

    extern crate libsecp256k1_rs;

    use libsecp256k1_rs::{ SecretKey, PublicKey };

    #[allow(non_snake_case)]
    fn main() {
        // Create the secret key "1"
        let k = SecretKey::from_hex("0000000000000000000000000000000000000000000000000000000000000001").unwrap();
        // Generate the public key, P = k.G
        let pub_from_k = PublicKey::from_secret_key(&k);
        let known_pub = PublicKey::from_hex("0479BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8").unwrap();
        // Compare it to the known value
        assert_eq!(pub_from_k, known_pub);
        println!("Ok")
    }
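To make "adding G to itself \( k_a \) times" concrete, the sketch below compares literal repeated addition with the double-and-add method that practical libraries typically use. It is only an illustration under stated assumptions: instead of secp256k1 it uses a tiny stand-in group (the order-1019 subgroup of the integers modulo the prime 2039), so "point addition" here is really modular multiplication. The names `MODULUS`, `ORDER`, `GEN`, `IDENTITY`, `point_add`, `mul_naive` and `mul_double_and_add` are hypothetical and are not part of the library used in this chapter.

```rust
// Toy stand-in group (NOT secp256k1, NOT secure): the order-1019 subgroup
// of the integers modulo 2039, described in additive language to match the text.
const MODULUS: u64 = 2039;
const ORDER: u64 = 1019;
const GEN: u64 = 4; // plays the role of G
const IDENTITY: u64 = 1; // plays the role of the point at infinity

/// "Point addition" in the toy group is multiplication modulo MODULUS.
fn point_add(a: u64, b: u64) -> u64 {
    a * b % MODULUS
}

/// k.P computed by literally adding P to itself k times.
fn mul_naive(k: u64, point: u64) -> u64 {
    (0..k).fold(IDENTITY, |acc, _| point_add(acc, point))
}

/// k.P computed by double-and-add: O(log k) group operations instead of O(k).
fn mul_double_and_add(mut k: u64, mut point: u64) -> u64 {
    let mut acc = IDENTITY;
    while k > 0 {
        if k & 1 == 1 {
            acc = point_add(acc, point);
        }
        point = point_add(point, point); // doubling step
        k >>= 1;
    }
    acc
}

fn main() {
    let k_a = 777; // hypothetical private key
    let p_a = mul_double_and_add(k_a, GEN);
    // Both methods agree on the public key.
    assert_eq!(p_a, mul_naive(k_a, GEN));
    // Adding G to itself ORDER times wraps around to the identity.
    assert_eq!(mul_double_and_add(ORDER, GEN), IDENTITY);
    println!("P_a = {}", p_a);
}
```

With 256-bit scalars the naive loop is hopeless, while double-and-add needs only a few hundred group operations, which is why computing a public key is fast even though reversing it is not.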

Creating a Signature

Approach Taken

Reversing ECC math multiplication (i.e. division) is pretty much infeasible when using properly chosen random values for your scalars ([5],[6]). This property is called the Discrete Log Problem, and is the principle behind many cryptographic schemes and digital signatures. A valid digital signature is evidence that the person providing the signature knows the private key corresponding to the public key with which the message is associated, or that they have solved the Discrete Log Problem.

The approach to creating signatures always follows this recipe:

- Generate a secret once-off number (called a nonce), $r$.

- Create a public key, $R$ from $r$ (where $R = r.G$).

- Send the following to Bob, your recipient - your message ($m$), $R$, and your public key ($P = k.G$).

The actual signature is created by hashing the combination of all the public information above to create a challenge, $e$:

$$ e = H(R || P || m) $$

The hashing function is chosen so that e has the same range as your private keys. In our case, we want something that returns a 256-bit number, so SHA256 is a good choice.

Now the signature is constructed using your private information:

$$ s = r + ke $$

Bob can now also calculate $e$, since he already knows $m, R, P$. But he doesn't know your private key, or nonce.

Note: When you construct the signature like this, it's known as a Schnorr signature, which is discussed in a following section. There are other ways of constructing $s$, such as ECDSA [2], which is used in Bitcoin.

But see this:

$$ sG = (r + ke)G $$

Multiply out the right-hand side:

$$ sG = rG + (kG)e $$

Substitute \(R = rG \) and \(P = kG \) and we have:

$$ sG = R + Pe $$

So Bob must just calculate the public key corresponding to the signature ($s.G$) and check that it equals the right-hand side of the last equation above ($R + P.e$), all of which Bob already knows.
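The signing recipe and Bob's check can be sketched end to end as follows. This is a minimal illustration, not the chapter's library: it assumes a tiny stand-in group (the order-1019 subgroup of the integers modulo 2039, where "point addition" is modular multiplication) and uses Rust's `DefaultHasher` as a non-cryptographic placeholder for $H$. All names (`scalar_mul`, `point_add`, `challenge`, `sign`, `verify`) are hypothetical.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

// Toy stand-in group (NOT secure): order-1019 subgroup of the integers mod 2039.
const MODULUS: u64 = 2039;
const ORDER: u64 = 1019; // scalars live modulo ORDER
const GEN: u64 = 4; // plays the role of G

/// Stand-in for k.P, computed by square-and-multiply modulo MODULUS.
fn scalar_mul(mut k: u64, mut point: u64) -> u64 {
    let mut acc = 1;
    while k > 0 {
        if k & 1 == 1 {
            acc = acc * point % MODULUS;
        }
        point = point * point % MODULUS;
        k >>= 1;
    }
    acc
}

/// Stand-in for point addition.
fn point_add(a: u64, b: u64) -> u64 {
    a * b % MODULUS
}

/// e = H(R || P || m), reduced into the scalar range.
/// Assumption: DefaultHasher is only a placeholder, it is NOT cryptographic.
fn challenge(r_pub: u64, p_pub: u64, msg: &str) -> u64 {
    let mut hasher = DefaultHasher::new();
    (r_pub, p_pub, msg).hash(&mut hasher);
    hasher.finish() % ORDER
}

/// Returns (R, s) with s = r + k.e
fn sign(k: u64, r: u64, msg: &str) -> (u64, u64) {
    let r_pub = scalar_mul(r, GEN);
    let p_pub = scalar_mul(k, GEN);
    let e = challenge(r_pub, p_pub, msg);
    (r_pub, (r + k * e) % ORDER)
}

/// Bob's check: s.G == R + e.P
fn verify(p_pub: u64, r_pub: u64, s: u64, msg: &str) -> bool {
    let e = challenge(r_pub, p_pub, msg);
    scalar_mul(s, GEN) == point_add(r_pub, scalar_mul(e, p_pub))
}

fn main() {
    // Fixed values keep the demo repeatable; a real nonce must be random.
    let (k, r) = (123, 456);
    let p_pub = scalar_mul(k, GEN);
    let (r_pub, s) = sign(k, r, "Meet me at 12");
    assert!(verify(p_pub, r_pub, s, "Meet me at 12"));
    // A tampered signature no longer satisfies the equation.
    assert!(!verify(p_pub, r_pub, (s + 1) % ORDER, "Meet me at 12"));
    println!("Signature verified");
}
```

Note that `verify` only ever touches public values ($R$, $P$, $m$ and $s$), which mirrors the point made above that Bob already knows everything he needs.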

Why do we Need the Nonce?

Why do we need a nonce in the standard signature?

Let's say we naïvely sign a message $m$ with

$$ e = H(P || m) $$

and then the signature would be \(s = ek \).

Now as before, we can check that the signature is valid:

$$ \begin{align} sG &= ekG \\ &= e(kG) = eP \end{align} $$

So far so good. But anyone can read your private key now because $s$ is a scalar, so \(k = {s}/{e} \) is not hard to do. With the nonce you have to solve \( k = (s - r)/e \), but $r$ is unknown, so this is not a feasible calculation as long as $r$ has been chosen randomly.

We can show that leaving off the nonce is indeed highly insecure:

    extern crate libsecp256k1_rs as secp256k1;

    use secp256k1::{SecretKey, PublicKey, thread_rng, Message};
    use secp256k1::schnorr::{ Challenge};

    #[allow(non_snake_case)]
    fn main() {
        // Create a random private key
        let mut rng = thread_rng();
        let k = SecretKey::random(&mut rng);
        println!("My private key: {}", k);
        let P = PublicKey::from_secret_key(&k);
        let m = Message::hash(b"Meet me at 12").unwrap();
        // Challenge, e = H(P || m)
        let e = Challenge::new(&[&P, &m]).as_scalar().unwrap();

        // Signature
        let s = e * k;

        // Verify the signature
        assert_eq!(PublicKey::from_secret_key(&s), e*P);
        println!("Signature is valid!");
        // But let's try calculate the private key from known information
        let hacked = s * e.inv();
        assert_eq!(k, hacked);
        // Print the recovered value, which matches the private key printed above
        println!("Hacked key: {}", hacked)
    }

ECDH

How do parties that want to communicate securely generate a shared secret for encrypting messages? One way is called the Elliptic Curve Diffie-Hellman exchange (ECDH), which is a simple method for doing just this.

ECDH is used in many places, including the Lightning Network during channel negotiation [3].

Here's how it works. Alice and Bob want to communicate securely. A simple way to do this is to use each other's public keys and calculate

$$ \begin{align} S_a &= k_a P_b \tag{Alice} \\ S_b &= k_b P_a \tag{Bob} \\ \implies S_a = k_a k_b G &\equiv S_b = k_b k_a G \end{align} $$

    extern crate libsecp256k1_rs as secp256k1;

    use secp256k1::{ SecretKey, PublicKey, thread_rng, Message };

    #[allow(non_snake_case)]
    fn main() {
        let mut rng = thread_rng();
        // Alice creates a public-private keypair
        let k_a = SecretKey::random(&mut rng);
        let P_a = PublicKey::from_secret_key(&k_a);
        // Bob creates a public-private keypair
        let k_b = SecretKey::random(&mut rng);
        let P_b = PublicKey::from_secret_key(&k_b);
        // They each calculate the shared secret based only on the other party's public information
        // Alice's version:
        let S_a = k_a * P_b;
        // Bob's version:
        let S_b = k_b * P_a;

        assert_eq!(S_a, S_b, "The shared secret is not the same!");
        println!("The shared secret is identical")
    }

For security reasons, the private keys are usually chosen at random for each session (you'll see the term ephemeral keys being used), but then we have the problem of not being sure the other party is who they say they are (perhaps due to a man-in-the-middle attack [4]).

Various additional authentication steps can be employed to resolve this problem, which we won't get into here.

Schnorr Signatures

If you follow the crypto news, you'll know that the new hotness in Bitcoin is Schnorr Signatures.

But in fact, they're old news! The Schnorr signature is considered the simplest digital signature scheme to be provably secure in a random oracle model. It is efficient and generates short signatures. It was covered by U.S. Patent 4,995,082, which expired in February 2008 [7].

So why all the Fuss?

What makes Schnorr signatures so interesting and potentially dangerous is their simplicity. Schnorr signatures are linear, so you have some nice properties.

Scalar multiplication on elliptic curves is linear too: it distributes over addition. So if you have two scalars $x, y$ with corresponding points $X, Y$, the following holds:

$$ (x + y)G = xG + yG = X + Y $$

Schnorr signatures are of the form \( s = r + e.k \). This construction is linear too, so it fits nicely with the linearity of elliptic curve math.

You saw this property in a previous section, when we were verifying the signature. Schnorr signatures' linearity makes them very attractive for, among others:

- signature aggregation;

- atomic swaps;

- "scriptless" scripts.

Naïve Signature Aggregation

Let's see how the linearity property of Schnorr signatures can be used to construct a two-of-two multi-signature.

Alice and Bob want to cosign something (a Tari transaction, say) without having to trust each other; i.e. they need to be able to prove ownership of their respective keys, and the aggregate signature is only valid if both Alice and Bob provide their part of the signature.

Assume private keys are denoted \( k_i \) and public keys \( P_i \). If we ask Alice and Bob to each supply a nonce, we can try:

$$ \begin{align} P_{agg} &= P_a + P_b \\ e &= H(R_a || R_b || P_a || P_b || m) \\ s_{agg} &= r_a + r_b + (k_a + k_b)e \\ &= (r_a + k_ae) + (r_b + k_be) \\ &= s_a + s_b \end{align} $$

So it looks like Alice and Bob can supply their own $R$, and anyone can construct the two-of-two signature from the sums of the $R$ values and the public keys. This does work:

    extern crate libsecp256k1_rs as secp256k1;

    use secp256k1::{SecretKey, PublicKey, thread_rng, Message};
    use secp256k1::schnorr::{Schnorr, Challenge};

    #[allow(non_snake_case)]
    fn main() {
        // Alice generates some keys
        let (ka, Pa, ra, Ra) = get_keyset();
        // Bob generates some keys
        let (kb, Pb, rb, Rb) = get_keyset();
        let m = Message::hash(b"a multisig transaction").unwrap();
        // The challenge uses both public nonces and both public keys
        // e = H(Ra || Rb || Pa || Pb || H(m))
        let e = Challenge::new(&[&Ra, &Rb, &Pa, &Pb, &m]).as_scalar().unwrap();
        // Alice calculates her signature
        let sa = ra + ka * e;
        // Bob calculates his signature
        let sb = rb + kb * e;
        // Calculate the aggregate signature
        let s_agg = sa + sb;
        // S = s_agg.G
        let S = PublicKey::from_secret_key(&s_agg);
        // This should equal Ra + Rb + e(Pa + Pb)
        assert_eq!(S, Ra + Rb + e*(Pa + Pb));
        println!("The aggregate signature is valid!")
    }

    #[allow(non_snake_case)]
    fn get_keyset() -> (SecretKey, PublicKey, SecretKey, PublicKey) {
        let mut rng = thread_rng();
        let k = SecretKey::random(&mut rng);
        let P = PublicKey::from_secret_key(&k);
        let r = SecretKey::random(&mut rng);
        let R = PublicKey::from_secret_key(&r);
        (k, P, r, R)
    }

But this scheme is not secure!

Key Cancellation Attack

Let's take the previous scenario again, but this time, Bob knows Alice's public key and nonce ahead of time, by waiting until she reveals them.

Now Bob lies and says that his public key is \( P_b' = P_b - P_a \) and public nonce is \( R_b' = R_b - R_a \).

Note that Bob doesn't know the private keys for these faked values, but that doesn't matter.

Everyone assumes that \(s_{agg}G = R_a + R_b' + e(P_a + P_b') \) as per the aggregation scheme.

But Bob can create this signature himself:

$$ \begin{align} s_{agg}G &= R_a + R_b' + e(P_a + P_b') \\ &= R_a + (R_b - R_a) + e(P_a + P_b - P_a) \\ &= R_b + eP_b \\ &= r_bG + ek_bG \\ \therefore s_{agg} &= r_b + ek_b = s_b \end{align} $$

    extern crate libsecp256k1_rs as secp256k1;

    use secp256k1::{SecretKey, PublicKey, thread_rng, Message};
    use secp256k1::schnorr::{Schnorr, Challenge};

    #[allow(non_snake_case)]
    fn main() {
        // Alice generates some keys
        let (ka, Pa, ra, Ra) = get_keyset();
        // Bob generates some keys as before
        let (kb, Pb, rb, Rb) = get_keyset();
        // ..and then publishes his forged keys
        let Pf = Pb - Pa;
        let Rf = Rb - Ra;

        let m = Message::hash(b"a multisig transaction").unwrap();
        // The challenge uses both public nonces and both public keys
        // e = H(Ra || Rb' || Pa || Pb' || H(m))
        let e = Challenge::new(&[&Ra, &Rf, &Pa, &Pf, &m]).as_scalar().unwrap();

        // Bob creates a forged signature
        let s_f = rb + e * kb;
        // Check if it's valid
        let sG = Ra + Rf + e*(Pa + Pf);
        assert_eq!(sG, PublicKey::from_secret_key(&s_f));
        println!("Bob successfully forged the aggregate signature!")
    }

    #[allow(non_snake_case)]
    fn get_keyset() -> (SecretKey, PublicKey, SecretKey, PublicKey) {
        let mut rng = thread_rng();
        let k = SecretKey::random(&mut rng);
        let P = PublicKey::from_secret_key(&k);
        let r = SecretKey::random(&mut rng);
        let R = PublicKey::from_secret_key(&r);
        (k, P, r, R)
    }

Better Approaches to Aggregation

In the Key Cancellation Attack, Bob didn't know the private keys for his published $R$ and $P$ values. We could defeat Bob by asking him to sign a message proving that he does know the private keys.

This works, but it requires another round of messaging between parties, which is not conducive to a great user experience.

A better approach would be one that incorporates one or more of the following features:

- It must be provably secure in the plain public-key model, without having to prove knowledge of secret keys, as we might have asked Bob to do in the naïve approach.

- It should satisfy the normal Schnorr equation, i.e. the resulting signature can be verified with an expression of the form \( R + e X \).

- It allows for Interactive Aggregate Signatures (IAS), where the signers are required to cooperate.

- It allows for Non-interactive Aggregate Signatures (NAS), where the aggregation can be done by anyone.

- It allows each signer to sign the same message, $m$.

- It allows each signer to sign their own message, \( m_i \).

MuSig

MuSig is a recently proposed ([8],[9]) simple signature aggregation scheme that satisfies all of the properties in the preceding section.

MuSig Demonstration

We'll demonstrate the interactive MuSig scheme here, where each signatory signs the same message. The scheme works as follows:

- Each signer has a public-private key pair, as before.

- Each signer shares a commitment to their public nonce (we'll skip this step in this demonstration). This step is necessary to prevent certain kinds of rogue key attacks [10].

- Each signer publishes the public key of their nonce, \( R_i \).

- Everyone calculates the same "shared public key", $X$ as follows:

$$ \begin{align} \ell &= H(X_1 || \dots || X_n) \\ a_i &= H(\ell || X_i) \\ X &= \sum a_i X_i \\ \end{align} $$

Note that in the preceding ordering of public keys, some deterministic convention should be used, such as the lexicographical order of the serialized keys.

- Everyone also calculates the shared nonce, \( R = \sum R_i \).

- The challenge, $e$ is \( H(R || X || m) \).

- Each signer provides their contribution to the signature as:

$$ s_i = r_i + k_i a_i e $$

Notice that the only departure here from a standard Schnorr signature is the inclusion of the factor \( a_i \).

The aggregate signature is the usual summation, \( s = \sum s_i \).

Verification is done, as usual, by confirming that:

$$ sG \equiv R + e X $$

Proof:

$$ \begin{align} sG &= \sum s_i G \\ &= \sum (r_i + k_i a_i e)G \\ &= \sum r_iG + k_iG a_i e \\ &= \sum R_i + X_i a_i e \\ &= \sum R_i + e \sum a_i X_i \\ &= R + e X \\ \blacksquare \end{align} $$

Let's demonstrate this using a three-of-three multisig:

    extern crate libsecp256k1_rs as secp256k1;

    use secp256k1::{ SecretKey, PublicKey, thread_rng, Message };
    use secp256k1::schnorr::{ Challenge };

    #[allow(non_snake_case)]
    fn main() {
        let (k1, X1, r1, R1) = get_keys();
        let (k2, X2, r2, R2) = get_keys();
        let (k3, X3, r3, R3) = get_keys();

        // I'm setting the order here. In general, they'll be sorted
        let l = Challenge::new(&[&X1, &X2, &X3]);
        // ai = H(l || p)
        let a1 = Challenge::new(&[ &l, &X1 ]).as_scalar().unwrap();
        let a2 = Challenge::new(&[ &l, &X2 ]).as_scalar().unwrap();
        let a3 = Challenge::new(&[ &l, &X3 ]).as_scalar().unwrap();
        // X = sum( a_i X_i)
        let X = a1 * X1 + a2 * X2 + a3 * X3;

        let m = Message::hash(b"SomeSharedMultiSigTx").unwrap();

        // Calc shared nonce
        let R = R1 + R2 + R3;

        // e = H(R || X || m)
        let e = Challenge::new(&[&R, &X, &m]).as_scalar().unwrap();

        // Signatures
        let s1 = r1 + k1 * a1 * e;
        let s2 = r2 + k2 * a2 * e;
        let s3 = r3 + k3 * a3 * e;
        let s = s1 + s2 + s3;

        // Verify
        let sg = PublicKey::from_secret_key(&s);
        let check = R + e * X;
        assert_eq!(sg, check, "The signature is INVALID");
        println!("The signature is correct!")
    }

    #[allow(non_snake_case)]
    fn get_keys() -> (SecretKey, PublicKey, SecretKey, PublicKey) {
        let mut rng = thread_rng();
        let k = SecretKey::random(&mut rng);
        let P = PublicKey::from_secret_key(&k);
        let r = SecretKey::random(&mut rng);
        let R = PublicKey::from_secret_key(&r);
        (k, P, r, R)
    }
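The demonstration above skips the nonce-commitment round. A minimal sketch of what such a commit-then-reveal step could look like is given below. It assumes the commitment is simply a hash of the public nonce, represents public nonces as plain integers, and uses Rust's `DefaultHasher` as a non-cryptographic placeholder for a real hash function. The helper names `commit` and `opens_to` are hypothetical.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Commitment t_i = H(R_i).
/// Assumption: DefaultHasher is only a placeholder, it is NOT cryptographic.
fn commit(nonce_pub: u64) -> u64 {
    let mut hasher = DefaultHasher::new();
    nonce_pub.hash(&mut hasher);
    hasher.finish()
}

/// Reveal check: the revealed public nonce must match the earlier commitment.
fn opens_to(commitment: u64, revealed_nonce_pub: u64) -> bool {
    commit(revealed_nonce_pub) == commitment
}

fn main() {
    // Hypothetical public nonces R_i, shown as integers for illustration.
    let nonces = [1201u64, 88, 1777];

    // Round 1: every signer publishes only a commitment to their public nonce.
    let commitments: Vec<u64> = nonces.iter().map(|r| commit(*r)).collect();

    // Round 2: the nonces are revealed and everyone checks them.
    assert!(nonces.iter().zip(&commitments).all(|(r, t)| opens_to(*t, *r)));

    // A signer who swaps their nonce after seeing the others is caught.
    assert!(!opens_to(commitments[1], 89));
    println!("All nonce commitments opened correctly");
}
```

The point of the extra round is ordering: because every signer is bound to their nonce before seeing anyone else's, nobody can choose a nonce as a function of the other participants' values.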

Security Demonstration

As a final demonstration, let's show how MuSig defeats the cancellation attack from the naïve signature scheme. Using the same idea as in the Key Cancellation Attack section, Bob has provided fake values for his nonce and public keys:

$$ \begin{align} R_f &= R_b - R_a \\ X_f &= X_b - X_a \\ \end{align} $$

This leads to both Alice and Bob calculating the following "shared" values:

$$ \begin{align} \ell &= H(X_a || X_f) \\ a_a &= H(\ell || X_a) \\ a_f &= H(\ell || X_f) \\ X &= a_a X_a + a_f X_f \\ R &= R_a + R_f (= R_b) \\ e &= H(R || X || m) \end{align} $$

Bob then tries to construct a unilateral signature following MuSig:

$$ s_b = r_b + k_s e $$

Let's assume for now that \( k_s \) doesn't need to be Bob's private key, but that he can derive it using information he knows. For this to be a valid signature, it must verify to \( R + eX \). So therefore:

$$ \begin{align} s_b G &= R + eX \\ (r_b + k_s e)G &= R_b + e(a_a X_a + a_f X_f) & \text{The first term looks good so far}\\ &= R_b + e(a_a X_a + a_f X_b - a_f X_a) \\ &= (r_b + e a_a k_a + e a_f k_b - e a_f k_a)G & \text{The r terms cancel as before} \\ k_s e &= e a_a k_a + e a_f k_b - e a_f k_a & \text{But nothing else is going away}\\ k_s &= a_a k_a + a_f k_b - a_f k_a \\ \end{align} $$

In the previous attack, Bob had all the information he needed on the right-hand side of the analogous calculation. In MuSig, Bob must somehow know Alice's private key and the faked private key (the terms don't cancel anymore) in order to create a unilateral signature, and so his cancellation attack is defeated.
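The difference between the two schemes can be checked numerically. The sketch below is an illustration under stated assumptions, not the chapter's library: it uses a tiny stand-in group (the order-1019 subgroup of the integers modulo 2039, where "point addition" is modular multiplication) and a deliberately simple, non-cryptographic `toy_hash` so that the outcome is deterministic. Bob's MuSig attempt uses \( k_s = a_f k_b \), the best guess available to him without Alice's private key. All function names are hypothetical.

```rust
// Toy stand-in group (NOT secure): order-1019 subgroup of the integers mod 2039.
const MODULUS: u64 = 2039;
const ORDER: u64 = 1019;
const GEN: u64 = 4; // plays the role of G

/// Stand-in for k.P (square-and-multiply modulo MODULUS).
fn scalar_mul(mut k: u64, mut point: u64) -> u64 {
    let mut acc = 1;
    while k > 0 {
        if k & 1 == 1 {
            acc = acc * point % MODULUS;
        }
        point = point * point % MODULUS;
        k >>= 1;
    }
    acc
}

/// Stand-in for point addition.
fn point_add(a: u64, b: u64) -> u64 {
    a * b % MODULUS
}

/// Stand-in for point subtraction a - b (multiply by the inverse of b).
fn point_sub(a: u64, b: u64) -> u64 {
    point_add(a, scalar_mul(MODULUS - 2, b))
}

/// Placeholder for H(...): a polynomial fold mapped into 1..ORDER-1.
/// Assumption: chosen only to keep the demo deterministic, NOT cryptographic.
fn toy_hash(parts: &[u64], msg: &str) -> u64 {
    let mut h: u64 = 0;
    for x in parts.iter().copied().chain(msg.bytes().map(u64::from)) {
        h = h.wrapping_mul(31).wrapping_add(x);
    }
    h % (ORDER - 1) + 1
}

/// Naive aggregation: does Bob's unilateral signature verify?
fn naive_forgery_verifies(x_a: u64, r_a: u64, k_b: u64, nonce_b: u64, msg: &str) -> bool {
    let x_f = point_sub(scalar_mul(k_b, GEN), x_a); // X_f = X_b - X_a
    let r_f = point_sub(scalar_mul(nonce_b, GEN), r_a); // R_f = R_b - R_a
    let e = toy_hash(&[r_a, r_f, x_a, x_f], msg);
    let s = (nonce_b + e * k_b) % ORDER;
    scalar_mul(s, GEN) == point_add(point_add(r_a, r_f), scalar_mul(e, point_add(x_a, x_f)))
}

/// MuSig: does the same trick verify against R + e.X?
fn musig_forgery_verifies(x_a: u64, r_a: u64, k_b: u64, nonce_b: u64, msg: &str) -> bool {
    let x_f = point_sub(scalar_mul(k_b, GEN), x_a);
    let r_f = point_sub(scalar_mul(nonce_b, GEN), r_a);
    let l = toy_hash(&[x_a, x_f], "");
    let a_a = toy_hash(&[l, x_a], "");
    let a_f = toy_hash(&[l, x_f], "");
    let x = point_add(scalar_mul(a_a, x_a), scalar_mul(a_f, x_f));
    let r = point_add(r_a, r_f);
    let e = toy_hash(&[r, x], msg);
    // Bob's best attempt: k_s = a_f * k_b
    let s = (nonce_b + k_b * a_f % ORDER * e) % ORDER;
    scalar_mul(s, GEN) == point_add(r, scalar_mul(e, x))
}

fn main() {
    // Alice's public values; Bob never learns her secrets (k_a = 3, r_a = 10).
    let (x_a, r_a) = (scalar_mul(3, GEN), scalar_mul(10, GEN));
    let (k_b, nonce_b) = (5, 20);
    let msg = "a multisig transaction";
    assert!(naive_forgery_verifies(x_a, r_a, k_b, nonce_b, msg));
    assert!(!musig_forgery_verifies(x_a, r_a, k_b, nonce_b, msg));
    println!("Naive scheme forged; MuSig forgery rejected");
}
```

In the MuSig case the leftover term is \( e(a_a - a_f)k_a \), which is exactly the part of the derivation above that "is not going away"; Bob would need Alice's private key to cancel it.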

Replay Attacks!

It's critical that a new nonce be chosen for every signing ceremony. The best way to do this is to make use of a cryptographically secure (pseudo-)random number generator (CSPRNG).

But even if this is the case, let's say an attacker can trick us into signing a new message by "rewinding" the signing ceremony to the point where partial signatures are generated. At this point, the attacker provides a different message, $m'$, which yields a different challenge, \( e' = H(...||m') \). Not suspecting any foul play, each party calculates their partial signature:

$$ s'_i = r_i + a_i k_i e' $$

However, the attacker still has access to the first set of signatures: \( s_i = r_i + a_i k_i e \). He now simply subtracts them:

$$ \begin{align} s'_i - s_i &= (r_i + a_i k_i e') - (r_i + a_i k_i e) \\ &= a_i k_i (e' - e) \\ \therefore k_i &= \frac{s'_i - s_i}{a_i(e' - e)} \end{align} $$

Everything on the right-hand side of the final equation is known by the attacker and thus he can trivially extract everybody's private key. It's difficult to protect against this kind of attack. One way is to make it difficult (or impossible) to stop and restart signing ceremonies. If a multi-sig ceremony gets interrupted, then you need to start from step one again. This is fairly unergonomic, but until a more robust solution comes along, it may be the best we have!
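The key extraction needs nothing more than scalar arithmetic, so it can be sketched without any curve operations. The example below assumes a toy prime group order of 1019 (secp256k1's is roughly $2^{256}$) and made-up values for the key, nonce, coefficient and challenges. The helper names `partial_sig` and `recover_key` are hypothetical.

```rust
// Toy prime group order; all scalar arithmetic is done modulo ORDER.
const ORDER: u64 = 1019;

/// Modular exponentiation by square-and-multiply.
fn mod_pow(mut base: u64, mut exp: u64, modulus: u64) -> u64 {
    let mut acc = 1;
    base %= modulus;
    while exp > 0 {
        if exp & 1 == 1 {
            acc = acc * base % modulus;
        }
        base = base * base % modulus;
        exp >>= 1;
    }
    acc
}

/// Modular inverse via Fermat's little theorem (valid because ORDER is prime).
fn inv(a: u64) -> u64 {
    mod_pow(a, ORDER - 2, ORDER)
}

/// Partial signature s_i = r_i + a_i k_i e
fn partial_sig(r: u64, a: u64, k: u64, e: u64) -> u64 {
    (r + a * k % ORDER * e) % ORDER
}

/// Attacker's calculation: k_i = (s'_i - s_i) / (a_i (e' - e))
fn recover_key(s: u64, s_prime: u64, a: u64, e: u64, e_prime: u64) -> u64 {
    let ds = (s_prime + ORDER - s) % ORDER;
    let de = (e_prime + ORDER - e) % ORDER;
    ds * inv(a * de % ORDER) % ORDER
}

fn main() {
    // Victim's private key, the reused nonce and the MuSig coefficient (made-up values).
    let (k, r, a) = (777, 345, 12);
    // Challenges for the original message m and the replayed message m'.
    let (e, e_prime) = (600, 601);

    let s = partial_sig(r, a, k, e);
    let s_prime = partial_sig(r, a, k, e_prime);

    let hacked = recover_key(s, s_prime, a, e, e_prime);
    assert_eq!(hacked, k);
    println!("Recovered private key: {}", hacked);
}
```

The attack only works because the same $r_i$ appears in both partial signatures; with a fresh nonce the subtraction leaves an unknown \( r'_i - r_i \) term and the key stays hidden.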

References

[1] Wikipedia: "RSA (Cryptosystem)" [online]. Available: https://en.wikipedia.org/wiki/RSA_(cryptosystem). Date accessed: 2018‑10‑11.

[2] Wikipedia: "Elliptic Curve Digital Signature Algorithm" [online]. Available: https://en.wikipedia.org/wiki/Elliptic_Curve_Digital_Signature_Algorithm. Date accessed: 2018‑10‑11.

[3] Github: "BOLT #8: Encrypted and Authenticated Transport, Lightning RFC" [online]. Available: https://github.com/lightningnetwork/lightning-rfc/blob/master/08-transport.md. Date accessed: 2018‑10‑11.

[4] Wikipedia: "Man in the Middle Attack" [online]. Available: https://en.wikipedia.org/wiki/Man-in-the-middle_attack. Date accessed: 2018‑10‑11.

[5] StackOverflow: "How does a Cryptographically Secure Random Number Generator Work?" [online]. Available: https://stackoverflow.com/questions/2449594/how-does-a-cryptographically-secure-random-number-generator-work. Date accessed: 2018‑10‑11.

[6] Wikipedia: "Cryptographically Secure Pseudorandom Number Generator" [online]. Available: https://en.wikipedia.org/wiki/Cryptographically_secure_pseudorandom_number_generator. Date accessed: 2018‑10‑11.

[7] Wikipedia: "Schnorr Signature" [online]. Available: https://en.wikipedia.org/wiki/Schnorr_signature. Date accessed: 2018‑09‑19.

[8] Blockstream: "Key Aggregation for Schnorr Signatures" [online]. Available: https://blockstream.com/2018/01/23/musig-key-aggregation-schnorr-signatures.html. Date accessed: 2018‑09‑19.

[9] G. Maxwell, A. Poelstra, Y. Seurin and P. Wuille, "Simple Schnorr Multi-signatures with Applications to Bitcoin" [online]. Available: https://eprint.iacr.org/2018/068.pdf. Date accessed: 2018‑09‑19.

[10] M. Drijvers, K. Edalatnejad, B. Ford, E. Kiltz, J. Loss, G. Neven and I. Stepanovs, "On the Security of Two-round Multi-signatures", Cryptology ePrint Archive, Report 2018/417 [online]. Available: https://eprint.iacr.org/2018/417.pdf. Date accessed: 2019‑02‑21.

Contributors

- https://github.com/CjS77

- https://github.com/SWvHeerden

- https://github.com/hansieodendaal

- https://github.com/neonknight64

- https://github.com/anselld

Introduction to Scriptless Scripts

- Definition of Scriptless Scripts

- Benefits of Scriptless Scripts

- List of Scriptless Scripts

- Role of Schnorr Signatures

- Schnorr Multi-signatures

- Adaptor Signatures

- Simultaneous Scriptless Scripts

- Atomic (Cross-chain Swaps) Example with Adaptor Signatures

- Zero Knowledge Contingent Payments

- Mimblewimble's Core Scriptless Script

- References

- Contributors

Definition of Scriptless Scripts

Scriptless Scripts are a means to execute smart contracts off-chain, through the use of Schnorr signatures [1].

The concept of Scriptless Scripts was born from Mimblewimble, which is a blockchain design that, with the exception of kernels and their signatures, does not store permanent data. Fundamental properties of Mimblewimble include both privacy and scaling, both of which require the implementation of Scriptless Scripts [2].

A brief introduction is also given in Scriptless Scripts, Layer 2 Scaling Survey.

Benefits of Scriptless Scripts

The benefits of Scriptless Scripts are functionality, privacy and efficiency.

Functionality

With regard to functionality, Scriptless Scripts are said to increase the range and complexity of smart contracts.

Currently, as within Bitcoin Script, limitations stem from the number of OP_CODES that have been enabled by the network. Scriptless Scripts move the specification and execution of smart contracts from the network to a discussion that only involves the participants of the smart contract.

Privacy

With regard to privacy, moving the specification and execution of smart contracts from on-chain to off-chain increases privacy. When on-chain, many details of the smart contract are shared to the entire network. These details include the number and addresses of participants, and the amounts transferred. By moving smart contracts off-chain, the network only knows that the participants agree that the terms of their contract have been satisfied and that the transaction in question is valid.

Efficiency

With regard to efficiency, Scriptless Scripts minimize the amount of data that requires verification and storage on-chain. By moving smart contracts off-chain, there are fewer overheads for full nodes and lower transaction fees for users [1].

List of Scriptless Scripts

In this report, various forms of scripts will be covered, including [3]:

- Simultaneous Scriptless Scripts

- Adaptor Signatures

- Zero Knowledge Contingent Payments

Role of Schnorr Signatures

To begin with, the fundamentals of Schnorr signatures must be defined. The signer has a private key $x$ and random private nonce $r$. $G$ is the generator of a discrete log hard group, $R=rG$ is the public nonce and $P=xG$ is the public key [4].

The signature, $s$, for message $m$, can then be computed as a simple linear equation

$$ s = r + e \cdot x $$

where

$$ e=H(P||R||m) \text{, } P=xG $$

The position on the line chosen is taken as the hash of all the data that one needs to commit to, the digital signature. The verification equation involves the multiplication of each of the terms in the equation by $G$ and takes into account the cryptographic assumption (discrete log) where $G$ can be multiplied in but not divided out, thus preventing deciphering.

$$ sG = rG + e \cdot xG $$

Elliptic Curve Digital Signature Algorithm (ECDSA) signatures (used in Bitcoin) are not linear in $x$ and $r$, and are thus less useful [2].
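The signing and verification equations above can be tried out in a few lines of Python. This is only a minimal sketch under stated assumptions: instead of an elliptic curve it uses a toy multiplicative group modulo the Mersenne prime $2^{127}-1$, which is not secure, and the helper names (`scalar_mul`, `point_add`, `challenge`) are illustrative and do not come from any library. The additive notation of the text maps to the toy group as noted in the comments.

```python
import hashlib
import secrets

# Toy group (NOT secure): integers modulo the Mersenne prime 2**127 - 1.
# Additive notation in the text maps to this group as follows:
#   k*G   -> pow(G, k, P_MOD)
#   A + B -> A * B % P_MOD
P_MOD = 2**127 - 1
ORDER = P_MOD - 1  # exponents may be reduced modulo P_MOD - 1 (Fermat)
G = 3

def scalar_mul(k, point):
    """k * point in the document's additive notation."""
    return pow(point, k % ORDER, P_MOD)

def point_add(a, b):
    """a + b in the document's additive notation."""
    return a * b % P_MOD

def challenge(P, R, m):
    """e = H(P || R || m), reduced to a scalar."""
    data = P.to_bytes(16, "big") + R.to_bytes(16, "big") + m
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % ORDER

def sign(x, m):
    r = secrets.randbelow(ORDER)           # private nonce r
    R = scalar_mul(r, G)                   # public nonce R = rG
    e = challenge(scalar_mul(x, G), R, m)
    s = (r + e * x) % ORDER                # s = r + e*x
    return R, s

def verify(P, m, R, s):
    e = challenge(P, R, m)
    # Check sG == R + eP
    return scalar_mul(s, G) == point_add(R, scalar_mul(e, P))

x = secrets.randbelow(ORDER)               # private key
P = scalar_mul(x, G)                       # public key P = xG
R, s = sign(x, b"hello")
print(verify(P, b"hello", R, s))                   # True
print(verify(P, b"hello", R, (s + 1) % ORDER))     # False: tampered signature
```

Because $s$ is linear in both $r$ and $x$, signatures and keys can be added together, which is the property the following sections rely on.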

Schnorr Multi-signatures

A multi-signature (multisig) has multiple participants that produce a signature. Every participant might produce a separate signature and concatenate them, forming a multisig.

With Schnorr Signatures, one can have a single public key, which is the sum of many different people's public keys. The resulting key is one against which signatures will be verifiable [5].

The formulation of a multisig involves taking the sum of all components; thus all nonces and $s$ values result in the formulation of a multisig [4]:

$$ s=\sum s(i) $$

It can therefore be seen that these signatures are essentially Scriptless Scripts. Independent public keys of several participants are joined to form a single key and signature, which, when published, do not divulge details of the number of participants involved or the original public keys.
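As a hedged illustration of this summation, the sketch below aggregates three participants' keys, nonces and partial signatures and checks the result against the ordinary Schnorr verification equation. It assumes the same insecure toy group as a stand-in for an elliptic curve, and all function names are illustrative. It is the naive scheme only and includes no defence against key cancellation.

```python
import hashlib
import secrets

# Toy group (NOT secure); k*G -> pow(G, k, P_MOD), A + B -> A * B % P_MOD.
P_MOD = 2**127 - 1
ORDER = P_MOD - 1
G = 3

def scalar_mul(k, point):
    return pow(point, k % ORDER, P_MOD)

def point_add(a, b):
    return a * b % P_MOD

def challenge(P, R, m):
    data = P.to_bytes(16, "big") + R.to_bytes(16, "big") + m
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % ORDER

def naive_multisig(keys, nonces, m):
    # The aggregate public key and nonce are the sums of the individual ones.
    P = R = 1  # identity element of the toy group
    for x, r in zip(keys, nonces):
        P = point_add(P, scalar_mul(x, G))
        R = point_add(R, scalar_mul(r, G))
    e = challenge(P, R, m)
    # Participant i contributes s_i = r_i + e*x_i; the multisig is the sum.
    s = sum((r + e * x) % ORDER for x, r in zip(keys, nonces)) % ORDER
    return P, R, s

keys = [secrets.randbelow(ORDER) for _ in range(3)]
nonces = [secrets.randbelow(ORDER) for _ in range(3)]
m = b"joint message"
P, R, s = naive_multisig(keys, nonces, m)

# A verifier only sees P, R and s, exactly as for a single signer.
valid = scalar_mul(s, G) == point_add(R, scalar_mul(challenge(P, R, m), P))
print(valid)  # True
```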

Adaptor Signatures

This multisig protocol can be modified to produce an adaptor signature, which serves as the building block for all Scriptless Script functions [5].

Instead of functioning as a full valid signature on a message with a key, an adaptor signature is a promise that a signature, once published, will reveal a secret.

This concept is similar to that of atomic swaps. However, no scripts are implemented. Since this is elliptic curve cryptography, there is only scalar multiplication of elliptic curve points. Fortunately, similar to a hash function, elliptic curves function in one way, so an elliptic curve point ($T$), can simply be shared and the secret will be its corresponding private key.

If two parties are considered, rather than providing their nonce $R$ in the multisig protocol, a blinding factor, taken as an elliptic curve point $T$, is conceived and sent in addition to $R$ (i.e. $R+T$). It can therefore be seen that $R$ is not blinded; it has instead been offset by the secret value $T$.

Here, the Schnorr multisig construction is modified such that the first party generates

$$ T=tG, R=rG $$

where $t$ is the shared secret, $G$ is the generator of a discrete log hard group and $r$ is the random nonce.

Using this information, the second party generates

$$ H(P||R+T||m)x $$

where the coins to be swapped are contained within message $m$. The first party can now calculate the complete signature $s$ such that

$$ s=r+t+H(P||R+T||m)x $$

The first party then calculates and publishes the adaptor signature $s'$ to the second party (and anyone else listening)

$$ s'=s-t $$

The second party can verify the adaptor signature $s'$ by asserting $s'G$

$$ s'G \overset{?}{=} R+H(P||R+T||m)P $$

However, this is not a valid signature, as the hashed nonce point is $R+T$ and not $R$.

The second party cannot retrieve a valid signature from this: recovering $s'+t$ would require solving the Elliptic Curve Discrete Logarithm Problem (ECDLP), which is computationally infeasible.

After the first party broadcasts $s$ to claim the coins within message $m$, the second party can calculate the secret $t$ from

$$ t=s-s' $$

The above is very general. However, by attaching auxiliary proofs too, an adaptor signature can be derived that will allow the translation of correct movement of the auxiliary protocol into a valid signature.
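The adaptor signature flow can be traced numerically. The sketch below follows the simplified single-key presentation used in this section (one key $x$ with $P=xG$) and assumes an insecure toy group in place of an elliptic curve; the helper names are illustrative only.

```python
import hashlib
import secrets

# Toy group (NOT secure); k*G -> pow(G, k, P_MOD), A + B -> A * B % P_MOD.
P_MOD = 2**127 - 1
ORDER = P_MOD - 1
G = 3

def scalar_mul(k, point):
    return pow(point, k % ORDER, P_MOD)

def point_add(a, b):
    return a * b % P_MOD

def challenge(P, nonce_point, m):
    data = P.to_bytes(16, "big") + nonce_point.to_bytes(16, "big") + m
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % ORDER

x = secrets.randbelow(ORDER)      # signing key, P = xG
r = secrets.randbelow(ORDER)      # nonce, R = rG
t = secrets.randbelow(ORDER)      # secret, T = tG
P, R, T = scalar_mul(x, G), scalar_mul(r, G), scalar_mul(t, G)
m = b"coins to be swapped"

# The challenge commits to the offset nonce R + T.
e = challenge(P, point_add(R, T), m)

s = (r + t + e * x) % ORDER       # complete signature
s_adaptor = (s - t) % ORDER       # adaptor signature s' = s - t

# The second party checks s'G == R + eP without knowing t.
adaptor_ok = scalar_mul(s_adaptor, G) == point_add(R, scalar_mul(e, P))

# Once s is broadcast it verifies as a normal signature with nonce R + T ...
full_ok = scalar_mul(s, G) == point_add(point_add(R, T), scalar_mul(e, P))

# ... and the second party learns the secret from t = s - s'.
recovered_t = (s - s_adaptor) % ORDER
print(adaptor_ok, full_ok, recovered_t == t)  # True True True
```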

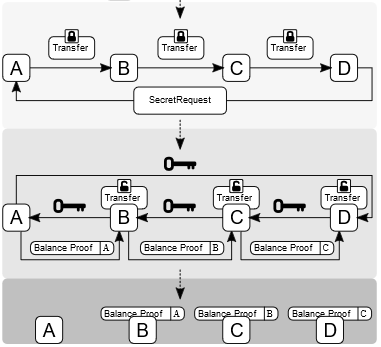

Simultaneous Scriptless Scripts

Preimages

The execution of separate transactions in an atomic fashion is achieved through preimages. If two transactions require the preimage to the same hash, once one is executed, the preimage is exposed so that the other one can be as well. Atomic swaps and Lightning channels use this construction [4].

Difference of Two Schnorr Signatures

If we consider the difference of two Schnorr signatures:

$$ d=s-s'=k-k'+e \cdot x-e' \cdot x' $$

The above equation can be verified in a similar manner to that of a single Schnorr signature, by multiplying each term by $G$ and confirming algebraic correctness:

$$ dG=kG-k'G+e \cdot xG-e' \cdot x'G $$

It must be noted that the difference $d$ is being verified, and not the Schnorr signature itself. $d$ functions as the translating key between two separate independent Schnorr signatures. Given $d$ and either $s$ or $s'$, the other can be computed. So possession of $d$ makes these two signatures atomic. This scheme does not link the two signatures or compromise their security.

For an atomic transaction, during the setup stage, someone provides the opposing party with the value $d$, and asserts it as the correct value. Once the transaction is signed, it can be adjusted to complete the other transaction. Atomicity is achieved, but can only be used by the person who possesses this $d$ value. Generally, the party that stands to lose money requires the $d$ value.

The $d$ value provides an interesting property with regard to atomicity. It is shared before signatures are public, which in turn allows the two transactions to be atomic once the transactions are published. By taking the difference of any two Schnorr signatures, one is able to construct transcripts, such as an atomic swap multisig contract.
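The role of $d$ as a translating key can be checked with a short sketch. It assumes an insecure toy group as a stand-in for an elliptic curve, with illustrative helper names; the two signatures use independent keys, nonces and messages.

```python
import hashlib
import secrets

# Toy group (NOT secure); k*G -> pow(G, k, P_MOD), A + B -> A * B % P_MOD.
P_MOD = 2**127 - 1
ORDER = P_MOD - 1
G = 3

def scalar_mul(k, point):
    return pow(point, k % ORDER, P_MOD)

def point_add(a, b):
    return a * b % P_MOD

def point_sub(a, b):
    # a - b: multiply by the modular inverse (Python 3.8+).
    return a * pow(b, -1, P_MOD) % P_MOD

def challenge(P, R, m):
    data = P.to_bytes(16, "big") + R.to_bytes(16, "big") + m
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % ORDER

def sign(x, k, m):
    P, R = scalar_mul(x, G), scalar_mul(k, G)
    e = challenge(P, R, m)
    return P, R, e, (k + e * x) % ORDER

# Two independent signatures: s = k + e*x and s' = k' + e'*x'.
P1, R1, e1, s1 = sign(secrets.randbelow(ORDER), secrets.randbelow(ORDER), b"tx A")
P2, R2, e2, s2 = sign(secrets.randbelow(ORDER), secrets.randbelow(ORDER), b"tx B")

d = (s1 - s2) % ORDER

# Verify dG == kG - k'G + e*xG - e'*x'G using public values only.
lhs = scalar_mul(d, G)
rhs = point_add(point_sub(R1, R2),
                point_sub(scalar_mul(e1, P1), scalar_mul(e2, P2)))
difference_ok = lhs == rhs

# Whoever holds d can translate one published signature into the other.
translated_s2 = (s1 - d) % ORDER
print(difference_ok, translated_s2 == s2)  # True True
```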

This is a critical feature for Mimblewimble, which was previously thought to be unable to support atomic swaps or Lightning channels [4].

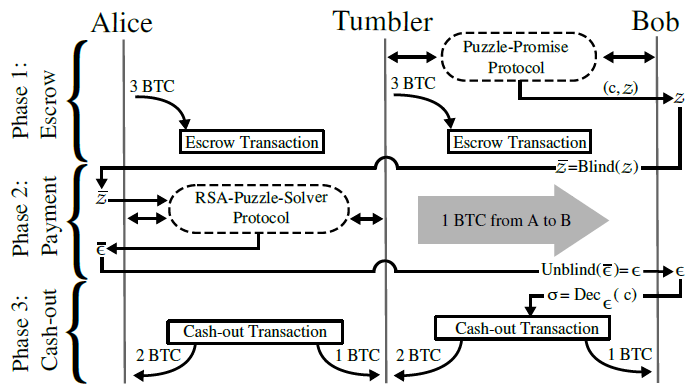

Atomic (Cross-chain Swaps) Example with Adaptor Signatures

Alice has a certain number of coins on a particular blockchain; Bob also has a certain number of coins on another blockchain. Alice and Bob want to engage in an atomic exchange. However, neither blockchain is aware of the other, nor are they able to verify each other's transactions.

The classical way of achieving this involves the use of the blockchain's script system to put a hash preimage challenge and then reveal the same preimage on both sides. Once Alice knows the preimage, she reveals it to take her coins. Bob then copies it off one chain to the other chain to take his coins.

Using adaptor signatures, the same result can be achieved through simpler means. In this case, both Alice and Bob put up their coins on two of two outputs on each blockchain. They sign the multisig protocols in parallel, where Bob then gives Alice the adaptor signatures for each side using the same value $T$. This means that for Bob to take his coins, he needs to reveal $t$; and for Alice to take her coins, she needs to learn $t$. Bob then replaces one of the signatures and publishes $t$, taking his coins. Alice computes $t$ from the final signature, visible on the blockchain, and uses that to reveal another signature, giving Alice her coins.

Thus it can be seen that atomicity is achieved. One is still able to exchange information, but now there are no explicit hashes or preimages on the blockchain. No script properties are necessary and privacy is achieved [4].

Zero Knowledge Contingent Payments

Zero Knowledge Contingent Payments (ZKCP) is a transaction protocol. This protocol allows a buyer to purchase information from a seller using coins in a manner that is private, scalable, secure and, importantly, in a trustless environment. The expected information is transferred only when payment is made. The buyer and seller do not need to trust each other or depend on arbitration by a third party [6].

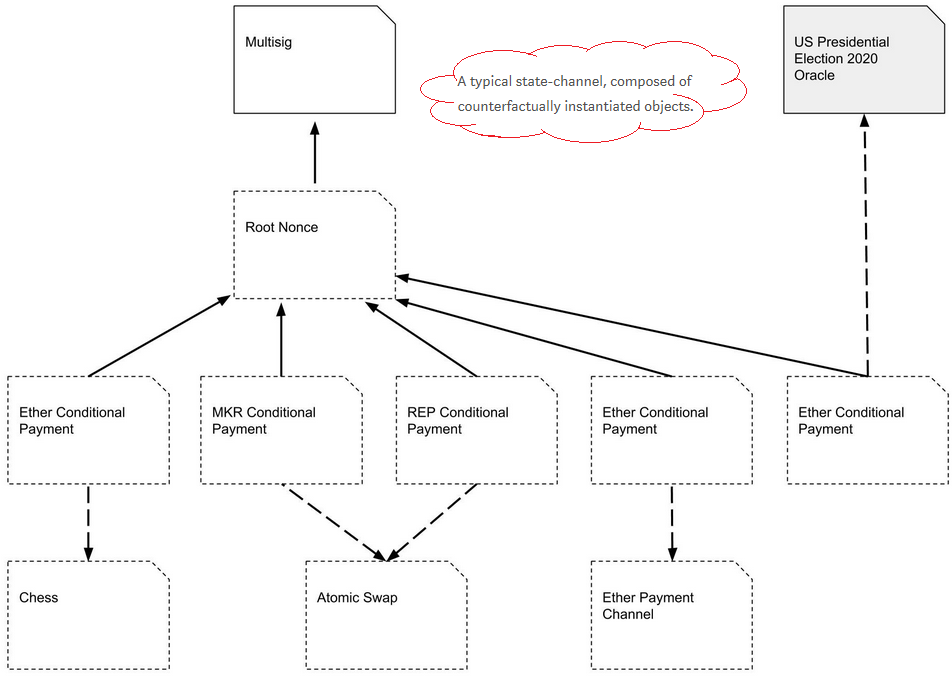

Mimblewimble's Core Scriptless Script

As previously stated, Mimblewimble is a blockchain design. Built similarly to Bitcoin, every transaction has inputs and outputs. Each input and output has a confidential transaction commitment. Confidential commitments have an interesting property where, in a valid balanced transaction, one can subtract the input from the output commitments, ensuring that all of the values of the Pedersen commitments balance out. Taking the difference of these inputs and outputs results in the multisig key of the owners of every output and every input in the transaction. This is referred to as the kernel.

Mimblewimble blocks will only have a list of new inputs, new outputs and signatures that are created from the aforementioned excess value [7].

Since the values are homomorphically encrypted, nodes can verify that no coins are being created or destroyed.
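The balancing property can be illustrated with Pedersen commitments of the form $C = vH + kG$. The sketch below is an assumption-laden toy: it uses an insecure multiplicative group instead of an elliptic curve, picks an arbitrary second generator `H` (in a real system nobody may know the discrete log of $H$ with respect to $G$), and omits range proofs entirely. The names `commit` and `excess` are illustrative.

```python
import secrets

# Toy group (NOT secure); k*G -> pow(G, k, P_MOD), A + B -> A * B % P_MOD.
P_MOD = 2**127 - 1
ORDER = P_MOD - 1
G = 3
H = 5  # toy second generator (assumption for illustration only)

def scalar_mul(k, point):
    return pow(point, k % ORDER, P_MOD)

def point_add(a, b):
    return a * b % P_MOD

def point_sub(a, b):
    return a * pow(b, -1, P_MOD) % P_MOD

def commit(value, blinding):
    """Pedersen commitment C = value*H + blinding*G."""
    return point_add(scalar_mul(value, H), scalar_mul(blinding, G))

def excess(inputs, outputs):
    """Sum of output commitments minus sum of input commitments."""
    total = 1  # identity element
    for value, blinding in outputs:
        total = point_add(total, commit(value, blinding))
    for value, blinding in inputs:
        total = point_sub(total, commit(value, blinding))
    return total

k1, k2, k3, k4 = (secrets.randbelow(ORDER) for _ in range(4))
inputs = [(40, k1), (2, k2)]
outputs = [(30, k3), (12, k4)]          # 40 + 2 == 30 + 12

# When values balance, only the blinding factors remain: a public key on G.
k_excess = (k3 + k4 - k1 - k2) % ORDER
balanced = excess(inputs, outputs) == scalar_mul(k_excess, G)

# Creating one extra coin leaves a stray 1*H term, so the check fails.
inflated = excess(inputs, [(30, k3), (13, k4)]) == scalar_mul(k_excess, G)
print(balanced, inflated)  # True False
```

A signature made with `k_excess` therefore shows that the signers jointly know the blinding factors and that no value term is left over.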

References

[1] "Crypto Innovation Spotlight 2: Scriptless Scripts" [online]. Available: https://medium.com/blockchain-capital/crypto-innovation-spotlight-2-scriptless-scripts-306c4eb6b3a8. Date accessed: 2018‑02‑27.

[2] A. Poelstra, "Mimblewimble and Scriptless Scripts". Presented at Real World Crypto, 2018 [online]. Available: https://www.youtube.com/watch?v=ovCBT1gyk9c&t=0s. Date accessed: 2018‑01‑11.

[3] A. Poelstra, "Scriptless Scripts". Presented at Layer 2 Summit Hosted by MIT DCI and Fidelity Labs on 18 May 2018 [online]. Available: https://www.youtube.com/watch?v=jzoS0tPUAiQ&t=3h36m. Date accessed: 2018‑05‑25.

[4] A. Poelstra, "Mimblewimble and Scriptless Scripts". Presented at MIT Bitcoin Expo 2017 Day 1 [online]. Available: https://www.youtube.com/watch?v=0mVOq1jaR1U&feature=youtu.be&t=39m20. Date accessed: 2017‑03‑04.

[5] "Flipping the Scriptless Script on Schnorr" [online]. Available: https://joinmarket.me/blog/blog/flipping-the-scriptless-script-on-schnorr/. Date accessed: November 2017.

[6] "The First Successful Zero-knowledge Contingent Payment" [online]. Available: https://bitcoincore.org/en/2016/02/26/zero-knowledge-contingent-payments-announcement/. Date accessed: 2016‑02‑26.

[7] "What is Mimblewimble?" [online]. Available: https://www.cryptocompare.com/coins/guides/what-is-mimblewimble/. Date accessed: 2018‑06‑30.

Contributors

The MuSig Schnorr Signature Scheme

- Introduction

- Overview of Multi-signatures

- Formation of MuSig

- Conclusions, Observations and Recommendations

- References

- Contributors

Introduction

This report investigates Schnorr Multi-Signature Schemes (MuSig), which make use of key aggregation and are provably secure in the plain public-key model.

Signature aggregation involves mathematically combining several signatures into a single signature, without having to prove Knowledge of Secret Keys (KOSK). This is known as the plain public-key model, where the only requirement is that each potential signer has a public key. The KOSK scheme requires that users prove knowledge (or possession) of the secret key during public key registration with a certification authority. It is one way to generically prevent rogue-key attacks.

Multi-signatures are a form of technology used to add multiple participants to cryptocurrency transactions. A traditional multi-signature protocol allows a group of signers to produce a joint multi-signature on a common message.

Schnorr Signatures and their Attack Vectors

Schnorr signatures produce a smaller on-chain size, support faster validation and have better privacy. They natively allow for combining multiple signatures into one through aggregation, and they permit more complex spending policies.

Signature aggregation also has its challenges. These include the rogue-key attack, where a participant steals funds using a specifically constructed key. Although this is easily solved for simple multi-signatures through an enrollment procedure that involves the keys signing themselves, supporting it across multiple inputs of a transaction requires plain public-key security, meaning there is no setup.

There is an additional attack, named the Russell attack, after Russell O'Connor, who discovered that for multiparty schemes, a party could claim ownership of someone else's private key and so spend the other outputs.

P. Wuille [1] addressed some of these issues and provided a solution that refines the Bellare-Neven (BN) scheme. He also discussed the performance improvements that were implemented for the scalar multiplication of the BN scheme and how they enable batch validation on the blockchain [2].

MuSig

Multi-signature protocols, introduced by [3], allow a group of signers (that individually possess their own private/public key pair) to produce a single signature $ \sigma $ on a message $ m $. Verification of the given signature $ \sigma $ can be publicly performed, given the message and the set of public keys of all signers.

A simple way to change a standard signature scheme into a multi-signature scheme is to have each signer produce a stand-alone signature for $ m $ with their private key, and to then concatenate all individual signatures.

The transformation of a standard signature scheme into a multi-signature scheme needs to be useful and practical. The newly calculated multi-signature scheme must therefore produce signatures where the size is independent of the number of signers and similar to that of the original signature scheme [4].

A traditional multi-signature scheme is a combination of a signing and verification algorithm, where multiple signers (each with their own private/public key) jointly sign a single message, resulting in a combined signature. This can then be verified by anyone knowing the message and the public keys of the signers, where a trusted setup with KOSK is a requirement.

MuSig is a multi-signature scheme that is novel in combining:

- support for key aggregation; and

- security in the plain public-key model.

There are two versions of MuSig that are provably secure, and which differ based on the number of communication rounds:

- Three-round MuSig, which only relies on the Discrete Logarithm (DL) assumption, on which Elliptic Curve Digital Signature Algorithm (ECDSA) also relies.

- Two-round MuSig, which instead relies on the slightly stronger One-More Discrete Logarithm (OMDL) assumption.

Key Aggregation

The term key aggregation refers to multi-signatures that look like a single-key signature, but with respect to an aggregated public key that is a function of only the participants' public keys. Thus, verifiers do not require the knowledge of the original participants' public keys. They can just be given the aggregated key. In some use cases, this leads to better privacy and performance. Thus, MuSig is effectively a key aggregation scheme for Schnorr signatures.

To make the traditional approach more effective and without needing a trusted setup, a multi-signature scheme must provide sublinear signature aggregation, along with the following properties:

- It must be provably secure in the plain public-key model.

- It must satisfy the normal Schnorr equation, whereby the resulting signature can be written as a function of a combination of the public keys.

- It must allow for Interactive Aggregate Signatures (IAS), where the signers are required to cooperate.

- It must allow for Non-interactive Aggregate Signatures (NAS), where the aggregation can be done by anyone.

- It must allow each signer to sign the same message.

- It must allow each signer to sign their own message.

This is different to a normal multi-signature scheme, where one message is signed by all. MuSig potentially provides all of these properties.

Other multi-signature schemes that already exist provide key aggregation for Schnorr signatures. However, they come with some limitations, such as needing to verify that participants actually have the private key corresponding to the public keys that they claim to have. Security in the plain public-key model means that no limitations exist. All that is needed from the participants is their public keys [1].

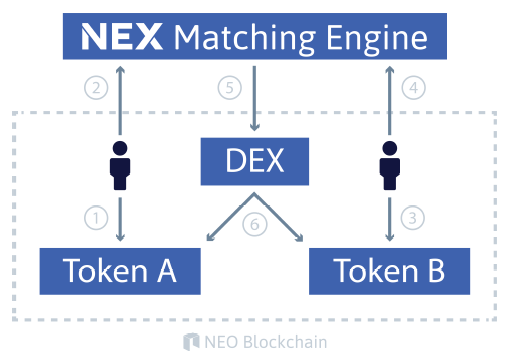

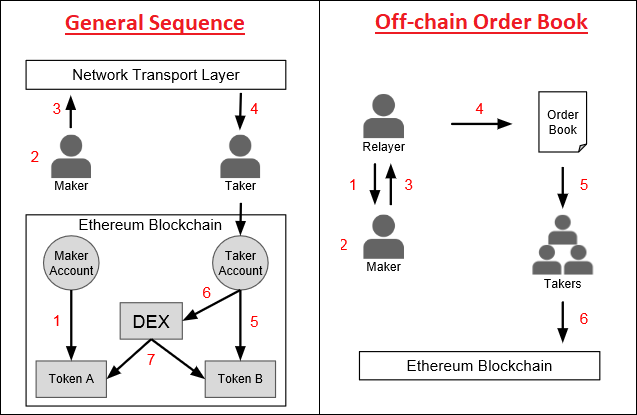

Overview of Multi-signatures

Recently, the most obvious use case for multi-signatures is with regard to Bitcoin, where it can function as a more efficient replacement of $ n-of-n $ multisig scripts (where the signatures required to spend and the signatures possible are equal in quantity) and other policies that permit a number of possible combinations of keys.

A key aggregation scheme also lets one reduce the number of public keys per input to one, as a user can send coins to the aggregate of all involved keys rather than including them all in the script. This leads to a smaller on-chain footprint, faster validation and better privacy.

Instead of creating restrictions with one signature per input, one signature can be used for the entire transaction. Traditionally, key aggregation cannot be used across multiple inputs, as the public keys are committed to by the outputs, and those can be spent independently. MuSig can be used here, with key aggregation done by the verifier.

No non-interactive aggregation scheme is known that only relies on the DL assumption, but interactive schemes are trivial to construct where a multi-signature scheme has every participant sign the concatenation of all messages. Reference [4], focused on key aggregation for Schnorr Signatures, showed that this is not always a desirable construction, and gave an IAS variant of BN with better properties instead [1].

Bitcoin $ m-of-n $ Multi-signatures

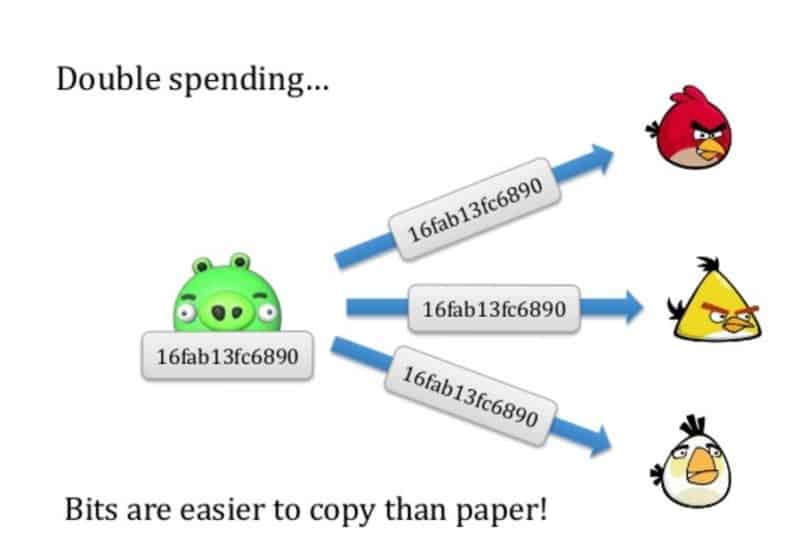

What are $ m-of-n $ Transactions?

Currently, standard transactions on the Bitcoin network can be referred to as single-signature transactions, as they require only one signature, from the owner of the private key associated with the Bitcoin address. However, the Bitcoin network supports far more complicated transactions, which can require the signatures of multiple people before the funds can be transferred. These are often referred to as $ m-of-n $ transactions, where m represents the number of signatures required to spend, while n represents the number of signatures possible [5].

Use Cases for $ m-of-n $ Multi-signatures

The use cases for $ m-of-n $ multi-signatures are as follows:

- When $ m=1 $ and $ n>1 $, it is considered a shared wallet, which could be used for small group funds that do not require much security. This is the least secure multi-signature option, because it is not multifactor. Any compromised individual would jeopardize the entire group. Examples of use cases include funds for a weekend or evening event, or a shared wallet for some kind of game. Besides being convenient to spend from, the only benefit of this setup is that all but one of the backup/password pairs could be lost and all of the funds would be recoverable.

- When $ m=n $, it is considered a partner wallet, which brings with it some nervousness, as no keys can be lost. As the number of signatures required increases, the risk also increases. This type of multi-signature can be considered to be a hard multifactor authentication.

- When $ m<0.5n $, it is considered a buddy account, which could be used for spending from corporate group funds. The consequence for the colluding minority needs to be greater than possible benefits. It is considered less convenient than a shared wallet, but much more secure.

- When $ m>0.5n $, it is referred to as a consensus account. The classic multi-signature wallet is a two of three, and is a special case of a consensus account. A two of three scheme has the best characteristics for creating new Bitcoin addresses, and for secure storing and spending. One compromised signatory does not compromise the funds. A single secret key can be lost and the funds can still be recovered. If done correctly, off-site backups are created during wallet setup. The way to recover funds is known by more than one party. The balance of power with a multi-signature wallet can be shifted by having one party control more keys than the other parties. If one party controls multiple keys, there is a greater risk of those keys not remaining as multiple factors.

- When $ m=0.5n $, it is referred to as a split account. This is an interesting use case, as there would be three of six, where one person holds three keys and three people hold one key. In this way, one person could control their own money, but the funds could still be recoverable, even if the primary key holder were to disappear with all of their keys. As $ n $ increases, the level of trust in the secondary parties can decrease. A good use case might be a family savings account that would automatically become an inheritance account if the primary account holder were to die [5].
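The categories above can be summarized as a small classification function. This is only an illustrative sketch: the function name `classify` is hypothetical, and because some of the conditions overlap (for example, $ m=n $ also satisfies $ m>0.5n $), the precedence used here, with the special cases $ m=n $ and $ m=1 $ checked first, is an assumption rather than something the list specifies.

```python
def classify(m, n):
    """Name the m-of-n wallet type using the categories listed above."""
    if not 1 <= m <= n:
        raise ValueError("require 1 <= m <= n")
    if n == 1:
        return "single-signature"      # ordinary one-key transaction
    if m == n:
        return "partner wallet"        # every key is required
    if m == 1:
        return "shared wallet"         # any one key can spend
    if 2 * m < n:
        return "buddy account"         # m < 0.5n
    if 2 * m == n:
        return "split account"         # m = 0.5n
    return "consensus account"         # m > 0.5n

for m, n in [(1, 3), (2, 2), (2, 5), (2, 3), (3, 6)]:
    print(f"{m}-of-{n}: {classify(m, n)}")
```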

Rogue Attacks

Refer to Key cancellation attack demonstration in Introduction to Schnorr Signatures.

Rogue attacks are a significant concern when implementing multi-signature schemes. Here, a subset of corrupted signers manipulate the public keys computed as functions of the public keys of honest users, allowing them to easily produce forgeries for the set of public keys (despite them not knowing the associated secret keys).
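A minimal sketch of such an attack against naive key summation is given below. It assumes an insecure toy group in place of an elliptic curve and uses illustrative helper names. The attacker announces a key computed from the honest user's public key, so that the aggregate key collapses to a key the attacker fully controls.

```python
import hashlib
import secrets

# Toy group (NOT secure); k*G -> pow(G, k, P_MOD), A + B -> A * B % P_MOD.
P_MOD = 2**127 - 1
ORDER = P_MOD - 1
G = 3

def scalar_mul(k, point):
    return pow(point, k % ORDER, P_MOD)

def point_add(a, b):
    return a * b % P_MOD

def point_sub(a, b):
    return a * pow(b, -1, P_MOD) % P_MOD

def challenge(P, R, m):
    data = P.to_bytes(16, "big") + R.to_bytes(16, "big") + m
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % ORDER

# Honest user publishes X1 = x1*G.
x_honest = secrets.randbelow(ORDER)
X_honest = scalar_mul(x_honest, G)

# Attacker knows x_a but announces X2 = x_a*G - X1 as "their" public key.
x_attacker = secrets.randbelow(ORDER)
X_rogue = point_sub(scalar_mul(x_attacker, G), X_honest)

# Naive aggregation: X = X1 + X2, which equals x_a*G.
aggregate = point_add(X_honest, X_rogue)
cancelled = aggregate == scalar_mul(x_attacker, G)

# The attacker alone forges a signature that verifies under the aggregate key.
m = b"spend the joint output"
r = secrets.randbelow(ORDER)
R = scalar_mul(r, G)
e = challenge(aggregate, R, m)
s = (r + e * x_attacker) % ORDER
forged_ok = scalar_mul(s, G) == point_add(R, scalar_mul(e, aggregate))
print(cancelled, forged_ok)  # True True
```

Note that the attacker never learns the discrete log of `X_rogue`, which is why a proof of knowledge of the secret key, as in the KOSK setting, blocks this attack.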

Initial proposals from [10], [11], [12], [13], [14], [15] and [16] were thus undone before a formal model was put forward along with a provably secure scheme from [17]. Unfortunately, despite being provably secure, their scheme is costly and requires an impractical interactive key generation protocol [4].

A means of generically preventing rogue-key attacks is to make it mandatory for users to prove knowledge (or possession) of the secret key during public key registration with a certification authority [18]. This setting is known as the KOSK assumption. The pairing-based multi-signature schemes described in [19] and [20] rely on the KOSK assumption in order to maintain security. However, according to [18] and [21], the cost of complexity and expense of the scheme, and the unrealistic and burdensome assumptions on the Public-key Infrastructure (PKI), have made this solution problematic.

As it stands, [21] provides one of the most practical multi-signature schemes, based on the Schnorr signature scheme, which is provably secure and does not contain any assumption on the key setup. Since the only requirement of this scheme is that each potential signer has a public key, this setting is referred to as the plain public-key model.

Reference [17] solves the rogue-key attack using a sophisticated interactive key generation protocol.

In [22], the number of rounds was reduced from three to two, using a homomorphic commitment scheme. Unfortunately, this increases the signature size and the computational cost of signing and verification.

Reference [23] proposed a signature scheme that involved the "double hashing" technique, which sees the reduction of the signature size compared to [22], while using only two rounds.

However, neither of these two variants allows for key aggregation.

Multi-signature schemes supporting key aggregation are easier to come by in the KOSK model. In particular, [24] proposed the CoSi scheme, which can be seen as the naive Schnorr multi-signature scheme, where the cosigners are organized in a tree structure for fast signature generation.

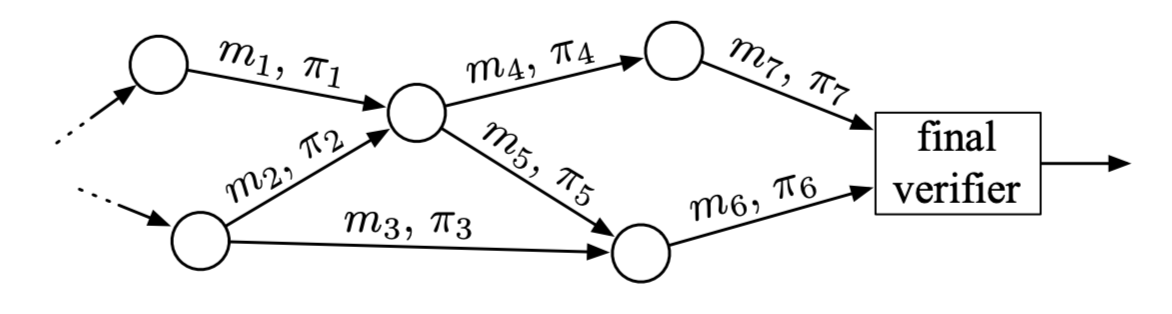

Interactive Aggregate Signatures

In some situations, it may be useful to allow each participant to sign a different message rather than a single common one. An IAS is one where each signer has their own message $ m_{i} $ to sign, and the joint signature proves that the $ i $ -th signer has signed $ m_{i} $. These schemes are considered to be more general than multi-signature schemes. However, they are not as flexible as non-interactive aggregate signatures ([25], [26]) and sequential aggregate signatures [27].

According to [21], a generic way of turning any multi-signature scheme into an IAS scheme, is for the signer running the multi-signature protocol to use the tuple of all public keys/message pairs involved in the IAS protocol, as the message.
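One hedged way to picture this transformation: every signer derives the same common message from the full list of (public key, message) pairs and then runs the ordinary multi-signature protocol on it. The length-prefixed encoding and the name `encode_pairs` below are assumptions made for illustration; [21] does not prescribe a serialization.

```python
def encode_pairs(pairs):
    """Serialize [(public_key, message), ...] with 4-byte length prefixes.

    Length prefixes keep the encoding unambiguous, so two different
    tuples can never serialize to the same byte string.
    """
    out = len(pairs).to_bytes(4, "big")
    for public_key, message in pairs:
        for field in (public_key, message):
            out += len(field).to_bytes(4, "big") + field
    return out

# Placeholder byte strings stand in for real public keys.
pairs = [(b"X1", b"pay 5 to Carol"), (b"X2", b"pay 7 to Dave")]

# Every signer computes the same common message and signs it jointly.
common_message = encode_pairs(pairs)
print(common_message.hex())
```

A valid joint signature on `common_message` then shows that the $ i $-th signer agreed to the whole tuple, including their own $ m_{i} $.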

For BN's scheme and Schnorr multi-signatures, this does not increase the number of communication rounds, as messages can be sent together with shares $ R_{i} $.

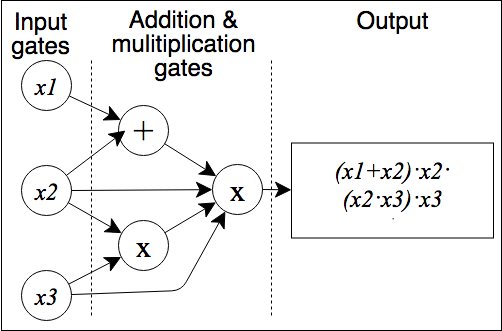

Applications of Interactive Aggregate Signatures

With regard to digital currency schemes, where all participants have the ability to validate transactions, these transactions consist of outputs (which have a verification key and amount) and inputs (which are references to outputs of earlier transactions). Each input contains a signature of a modified version of the transaction to be validated with its referenced output's key. Some outputs may require multiple signatures to be spent. Transactions spending such an output are referred to as m-of-n multi-signature transactions [28], and the current implementation corresponds to the trivial way of building a multi-signature scheme by concatenating individual signatures. Additionally, a threshold policy can be enforced where only $ m $ valid signatures out of the $ n $ possible ones are needed to redeem the transaction. (Again, this is the most straightforward way to turn a multi-signature scheme into some kind of basic threshold signature scheme.)

While several multi-signature schemes could offer an improvement over the currently available method, two properties increase the possible impact:

- The availability of key aggregation removes the need for verifiers to see all the involved keys, improving bandwidth, privacy and validation cost.

- Security under the plain public-key model enables multi-signatures across multiple inputs of a transaction, where the choice of signers cannot be committed to in advance. This greatly increases the number of situations in which multi-signatures are beneficial.

Native Multi-signature Support

An improvement is to replace the need for implementing $ n-of-n $ multi-signatures with a constant-size, multi-signature primitive such as BN. While this is an improvement in terms of size, it still needs to contain all of the signers' public keys. Key aggregation improves upon this further, as a single-key predicate can be used instead. This is smaller and has lower computational cost for verification. Predicate encryption is an encryption paradigm that gives a master secret key owner fine-grained control over access to encrypted data [29]. It also improves privacy, as the participant keys and their count remain private to the signers.

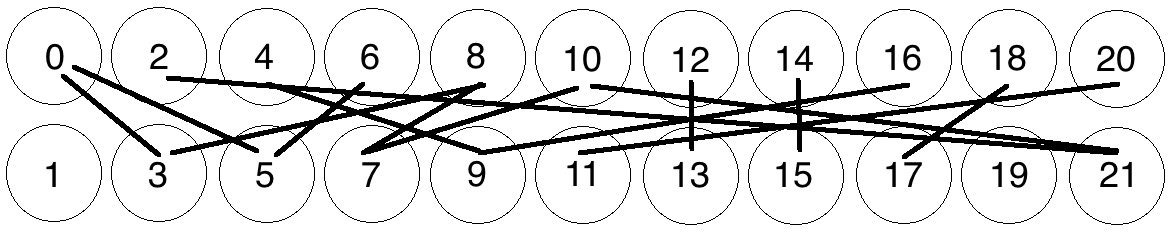

When generalizing to the $ m-of-n $ scenario, several options exist. One is to forego key aggregation, and still include all potential signer keys in the predicates, while still only producing a single signature for the chosen combination of keys. Alternatively, a Merkle tree [30] where the leaves are permitted combinations of public keys (in aggregated form), can be employed. The predicate in this case would take as input an aggregated public key, a signature and a proof. Its validity would depend on the signature being valid with the provided key, and the proof establishing that the key is in fact one of the leaves of the Merkle tree, identified by its root hash. This approach is very generic, as it works for any subset of combinations of keys, and as a result has good privacy, as the exact policy is not visible from the proof.
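The Merkle tree option can be sketched as follows for a two-of-three policy. The leaves are placeholder byte strings standing in for the aggregated keys of each permitted combination; the hashing details (SHA-256, domain-separation prefixes, duplicating the last node on odd levels) are assumptions for illustration and are not taken from [30].

```python
import hashlib

def h(data):
    return hashlib.sha256(data).digest()

def merkle_root_and_proof(leaves, index):
    """Return (root, proof); proof is a list of (sibling, sibling_is_left)."""
    level = [h(b"\x00" + leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])          # duplicate last node if odd
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = [h(b"\x01" + level[i] + level[i + 1])
                 for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof

def verify_proof(root, leaf, proof):
    node = h(b"\x00" + leaf)
    for sibling, sibling_is_left in proof:
        if sibling_is_left:
            node = h(b"\x01" + sibling + node)
        else:
            node = h(b"\x01" + node + sibling)
    return node == root

# Placeholders for the aggregated keys of the permitted 2-of-3 combinations.
leaves = [b"agg(A,B)", b"agg(A,C)", b"agg(B,C)"]

# The output commits only to the root; the spender reveals one leaf and proof.
root, proof = merkle_root_and_proof(leaves, 2)
print(verify_proof(root, b"agg(B,C)", proof))   # True
print(verify_proof(root, b"agg(A,D)", proof))   # False
```

In the predicate described above, this membership check would be combined with verifying the signature against the revealed aggregated key.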

Some key aggregation schemes that do not protect against rogue-key attacks can be used instead in the above cases, under the assumption that the sender is given a proof of knowledge/possession for the receivers' private keys. However, these schemes are difficult to prove secure, except by using very large proofs of knowledge. As those proofs of knowledge/possession do not need to be seen by verifiers, they are effectively certified by the sender's validation. However, passing them around to senders is inconvenient, and easy to get wrong. Using a scheme that is secure in the plain public-key model categorically avoids these concerns.

Another alternative is to use an algorithm whose key generation requires a trusted setup, e.g. in the KOSK model. While many of these schemes have been proven secure, they rely on mechanisms that are usually not implemented by certification authorities ([18], [19], [20], [21]).

Cross-input Multi-signatures

The previous sections explained how the number of signatures per input can generally be reduced to one. However, it is possible to go further and replace this with a single signature per transaction. Doing so requires a fundamental change in validation semantics, as the validity of separate inputs is no longer independent. As a result, the outputs can no longer be modeled as predicates, where the secret key owner is given access to encrypted data. Instead, they are modeled as functions that return a Boolean (data type with only two possible values) plus a set of zero or more public keys.

Overall validity requires all returned Booleans to be True and a multi-signature of the transaction with $ L $ the union of all returned keys.

With regard to Bitcoin, this can be implemented by providing an alternative to the signature checking opcode OP_CHECKSIG and related opcodes in the Script language. Instead of returning the result of an actual ECDSA verification, they always return True, but additionally add the public key with which the verification would have taken place to a transaction-wide multi-set of keys. Finally, after all inputs are verified, a multi-signature present in the transaction is verified against that multi-set. In case the transaction spends inputs from multiple owners, they will need to collaborate to produce the multi-signature, or choose to only use the original opcodes. Adding these new opcodes is possible in a backward-compatible way [4].
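The validation flow described above can be modeled in a few lines. This is only a sketch of the semantics, not Bitcoin Script: `validate_transaction` and the `multisig_ok` callback are hypothetical names, and the callback stands in for the real multi-signature verification.

```python
from collections import Counter

def validate_transaction(input_results, multisig_ok):
    """input_results: one (ok, returned_keys) pair per input script.
    multisig_ok: callable that checks the transaction's multi-signature
    against the collected multi-set of keys (a stand-in here)."""
    keys = Counter()                      # transaction-wide multi-set of keys
    for ok, returned_keys in input_results:
        if not ok:                        # every input must return True
            return False
        keys.update(returned_keys)        # collect keys instead of verifying
    return multisig_ok(keys)              # one signature check per transaction

# Two inputs; the key "X1" is required twice, so it appears twice in the multi-set.
inputs = [(True, ["X1"]), (True, ["X1", "X2"])]
expected = Counter({"X1": 2, "X2": 1})
result = validate_transaction(inputs, lambda ks: ks == expected)
```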

Protection against Rogue-key Attacks

In Bitcoin, when taking cross-input signatures into account, there is no published commitment to the set of signers, as each transaction input can independently spend an output that requires authorization from distinct participants. This functionality was not restricted, as it would then interfere with fungibility improvements such as CoinJoin [31]. Due to the lack of certification, security against rogue-key attacks is of great importance.

If it is assumed that transactions used a single multi-signature that was vulnerable to rogue-key attacks, an attacker could identify an arbitrary number of outputs they want to steal, with the public keys $ X_{1},...,X_{n-t} $ and then use the rogue-key attack to determine $ X_{n-t+1},...,X_{n} $ such that they can sign for the aggregated key $ \tilde{X} $. They would then send a small amount of their own money to outputs with predicates corresponding to the keys $ X_{n-t+1},...,X_{n} $. Finally, they can create a transaction that spends all of the victims' coins together with the ones they have just created by forging a multi-signature for the whole transaction.

It can be seen that in the case of multi-signatures across inputs, theft can occur through the ability to forge a signature over a set of keys that includes at least one key which is not controlled by the attacker. According to the plain public-key model, this is considered a win for the attacker. This is in contrast to the single-input multi-signature case, where theft is only possible by forging a signature for the exact (aggregated) keys contained in an existing output. As a result, it is no longer possible to rely on proofs of knowledge/possession that are private to the signers.

Formation of MuSig

Notation Used

This section gives the general notation of mathematical expressions when specifically referenced. This information is important pre-knowledge for the remainder of the report.

- Let $ p $ be a large prime number.

- Let $ \mathbb{G} $ denote a cyclic group of prime order $ p $.

- Let $ \mathbb Z_p $ denote the ring of integers modulo $ p $.

- Let a generator of $ \mathbb{G} $ be denoted by $ g $. Thus, there exists an element $ g \in\mathbb{G} $ such that $ \mathbb{G} = \lbrace 1, \mspace{3mu}g, \mspace{3mu}g^2,\mspace{3mu}g^3, ..., \mspace{3mu}g^{p-1} \rbrace $.

- Let $ \textrm{H} $ denote the hash function.

- Let $ S= \lbrace (X_{1}, m_{1}),..., (X_{n}, m_{n}) \rbrace $ be the multi-set of all public key/message pairs of all participants, where $ X_{i}=g^{x_{i}} $.

- Let $ \langle S \rangle $ denote a lexicographical encoding of the multi-set of public key/message pairs in $ S $.

- Let $ L= \lbrace X_{1}=g^{x_{1}},...,X_{n}=g^{x_{n}} \rbrace $ be the multi-set of all public keys.

- Let $ \langle L \rangle $ denote a lexicographical encoding of the multi-set of public keys $ L= \lbrace X_{1}...X_{n} \rbrace $.

- Let $ \textrm{H}_{com} $ denote the hash function in the commitment phase.

- Let $ \textrm{H}_{agg} $ denote the hash function used to compute the aggregated key.

- Let $ \textrm{H}_{sig} $ denote the hash function used to compute the signature.

- Let $ X_{1} $ and $ x_{1} $ be the public and private key of a specific signer.

- Let $ m $ be the message that will be signed.

- Let $ X_{2},...,X_{n} $ be the public keys of other cosigners.

Recap on Schnorr Signature Scheme

The Schnorr signature scheme [6] uses group parameters $ (\mathbb{G},p,g) $ and a hash function $ \textrm{H} $.

A private/public key pair is a pair

$$ (x,X) \in \lbrace 0,...,p-1 \rbrace \times \mathbb{G} $$

where $ X=g^{x} $.

To sign a message $ m $, the signer draws a random integer $ r \in \mathbb{Z}_{p} $ and computes

$$ \begin{aligned} R &= g^{r} \\ c &= \textrm{H}(X,R,m) \\ s &= r+cx \end{aligned} $$

The signature is the pair $ (R,s) $, and its validity can be checked by verifying whether $$ g^{s} = RX^{c} $$
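As a minimal sketch, the key-prefixed scheme can be run in a toy group. The parameters below are assumptions chosen for readability and are far too small to be secure: `P = 2039` is the modulus, `p = 1019` is the prime group order and `g = 4` generates the order-$ p $ subgroup. SHA-256 reduced modulo $ p $ stands in for $ \textrm{H} $.

```python
import hashlib
import secrets

# Toy parameters (assumption, insecure): order-p subgroup of Z_P^*, P = 2p + 1.
P, p, g = 2039, 1019, 4

def H(*parts) -> int:
    """Stand-in for the hash function H, mapping its inputs into Z_p."""
    data = "|".join(str(x) for x in parts).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % p

def keygen():
    x = secrets.randbelow(p)          # private key x in {0, ..., p-1}
    return x, pow(g, x, P)            # public key X = g^x

def sign(x, m):
    X = pow(g, x, P)
    r = secrets.randbelow(p)          # random nonce r
    R = pow(g, r, P)                  # R = g^r
    c = H(X, R, m)                    # key-prefixed challenge
    return R, (r + c * x) % p         # s = r + cx (mod p)

def verify(X, m, sig):
    R, s = sig
    c = H(X, R, m)
    return pow(g, s, P) == R * pow(X, c, P) % P   # g^s == R X^c

x, X = keygen()
sig = sign(x, "hello")
```

Verification succeeds because $ g^{s} = g^{r+cx} = g^{r}(g^{x})^{c} = RX^{c} $.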

This scheme is referred to as the "key-prefixed" variant of the scheme, which sees the public key hashed together with $ R $ and $ m $ [7]. This variant was thought to have a better multi-user security bound than the classic variant [8]. However, in [9], the key-prefixing was seen as unnecessary to enable good multi-user security for Schnorr signatures.

For the development of the MuSig Schnorr-based multi-signature scheme [4], key-prefixing is required by the security proof, even though no attack on the non-prefixed form is known. Key-prefixing also matches practice, as messages signed in Bitcoin always indirectly commit to the public key.

Design of Schnorr Multi-signature Scheme

The naive way to design a Schnorr multi-signature scheme would be as follows:

A group of $ n $ signers want to cosign a message $ m $. Each cosigner randomly generates and communicates to others a share

$$ R_i = g^{r_{i}} $$

Each of the cosigners then computes:

$$ R = \prod _{i=1}^{n} R_{i} \mspace{30mu} \mathrm{and} \mspace{30mu} c = \textrm{H} (\tilde{X},R,m) $$

where $$ \tilde{X} = \prod_{i=1}^{n}X_{i} $$

is the product of the individual public keys. The partial signature is then given by

$$ s_{i} = r_{i}+cx_{i} $$

All partial signatures are then combined into a single signature $(R,s)$ where

$$ s = \displaystyle\sum_{i=1}^{n}s_i \mod p $$

The validity of a signature $ (R,s) $ on message $ m $ for public keys $ \lbrace X_{1},...X_{n} \rbrace $ is equivalent to

$$ g^{s} = R\tilde{X}^{c} $$

where

$$ \tilde{X} = \prod_{i=1}^{n} X_{i} \mspace{30mu} \mathrm{and} \mspace{30mu} c = \textrm{H}(\tilde{X},R,m) $$

Note that this is exactly the verification equation for a traditional key-prefixed Schnorr signature with respect to public key $ \tilde{X} $, a property termed key aggregation. However, these protocols are vulnerable to a rogue-key attack ([12], [14], [15] and [17]) where a corrupted signer sets its public key to

$$ X_{1}=g^{x_{1}} (\prod_{i=2}^{n} X_{i})^{-1} $$

allowing the signer to produce signatures for public keys $ \lbrace X_{1},...X_{n} \rbrace $ by themselves.
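The attack can be reproduced in a toy group. The sketch below uses assumed, insecure parameters (`P = 2039`, group order `p = 1019`, generator `g = 4`) and SHA-256 as a stand-in for $ \textrm{H} $; the function names are hypothetical. The attacker never learns the honest private keys, yet the forged signature passes the naive verification.

```python
import hashlib
import secrets

# Toy parameters (assumption, insecure): order-p subgroup of Z_P^*, P = 2p + 1.
P, p, g = 2039, 1019, 4

def H(*parts) -> int:
    data = "|".join(str(x) for x in parts).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % p

def naive_aggregate(keys):
    X_agg = 1
    for X in keys:                    # plain product of the public keys
        X_agg = X_agg * X % P
    return X_agg

def naive_verify(keys, m, sig):
    R, s = sig
    X_agg = naive_aggregate(keys)
    c = H(X_agg, R, m)
    return pow(g, s, P) == R * pow(X_agg, c, P) % P

def rogue_key_forgery(honest_keys, m):
    x1 = secrets.randbelow(p)
    # Rogue key: X_1 = g^{x_1} * (X_2 * ... * X_n)^{-1}
    inverse = pow(naive_aggregate(honest_keys), -1, P)
    X1 = pow(g, x1, P) * inverse % P
    keys = [X1] + honest_keys
    # The aggregate collapses to g^{x_1}, so x_1 alone signs for everyone.
    r = secrets.randbelow(p)
    R = pow(g, r, P)
    c = H(naive_aggregate(keys), R, m)
    return keys, (R, (r + c * x1) % p), x1

honest_keys = [pow(g, secrets.randbelow(p), P) for _ in range(2)]
keys, forged, x1 = rogue_key_forgery(honest_keys, "steal the coins")
```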

Bellare and Neven Signature Scheme

Bellare and Neven [21] proceeded differently in order to avoid any key setup. A group of $ n $ signers want to cosign a message $ m $. Their main idea is to have each cosigner use a distinct "challenge" when computing their partial signature

$$ s_{i} = r_{i}+c_{i}x_{i} $$

defined as

$$ c_{i} = \textrm{H}( \langle L \rangle , X_{i},R,m) $$

where

$$ R = \prod_{i=1}^{n}R_{i} $$

The equation to verify signature $ (R,s) $ on message $ m $ for the public keys $ L $ is

$$ g^s = R\prod_{i=1}^{n}X_{i}^{c_{i}} $$
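To see why an honestly produced signature satisfies this equation, the following short derivation assumes, as in the naive scheme, that $ s = \sum_{i=1}^{n}s_{i} \mod p $ and $ X_{i} = g^{x_{i}} $:

$$ \begin{aligned} g^{s} &= g^{\sum_{i=1}^{n}(r_{i}+c_{i}x_{i})} \\ &= \prod_{i=1}^{n}g^{r_{i}} \prod_{i=1}^{n}(g^{x_{i}})^{c_{i}} \\ &= R\prod_{i=1}^{n}X_{i}^{c_{i}} \end{aligned} $$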

A preliminary round is also added to the signature protocol, where each signer commits to its share $ R_i $ by sending $ t_i = \textrm{H}^\prime(R_i) $ to other cosigners first.

This stops any cosigner from setting $ R = \prod_{i=1}^{n}R_{i} $ to some maliciously chosen value, and also allows the reduction to simulate the signature oracle in the security proof.

Bellare and Neven [21] showed that this yields a multi-signature scheme provably secure in the plain public-key model under the Discrete Logarithm assumptions, modeling $ \textrm{H} $ and $ \textrm{H}^\prime $ as random oracles. However, this scheme does not allow key aggregation anymore, since the entire list of public keys is required for verification.
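A minimal sketch of the Bellare and Neven protocol follows, with all signers simulated in one process. It assumes toy, insecure parameters (`P = 2039`, group order `p = 1019`, generator `g = 4`), a sorted tuple as a stand-in for $ \langle L \rangle $, and SHA-256 with a tag to separate $ \textrm{H} $ from $ \textrm{H}^\prime $. Function names are hypothetical.

```python
import hashlib
import secrets

# Toy parameters (assumption, insecure): order-p subgroup of Z_P^*, P = 2p + 1.
P, p, g = 2039, 1019, 4

def H(tag, *parts) -> int:
    """Tagged hash into Z_p; "com" plays the role of H', "sig" of H."""
    data = "|".join([tag, *map(str, parts)]).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % p

def bn_sign(secret_keys, m):
    keys = [pow(g, x, P) for x in secret_keys]
    L = tuple(sorted(keys))                            # stand-in for <L>
    nonces = [secrets.randbelow(p) for _ in keys]
    shares = [pow(g, r, P) for r in nonces]            # R_i = g^{r_i}
    commitments = [H("com", R_i) for R_i in shares]    # t_i, sent first
    # After all t_i are received, the R_i are revealed and checked.
    if any(t != H("com", R_i) for t, R_i in zip(commitments, shares)):
        raise ValueError("commitment mismatch, abort")
    R = 1
    for R_i in shares:
        R = R * R_i % P
    s = 0
    for x, X, r in zip(secret_keys, keys, nonces):
        c_i = H("sig", L, X, R, m)                     # per-signer challenge
        s = (s + r + c_i * x) % p                      # add s_i = r_i + c_i x_i
    return keys, (R, s)

def bn_verify(keys, m, sig):
    R, s = sig
    L = tuple(sorted(keys))
    rhs = R
    for X in keys:                                     # needs every public key
        rhs = rhs * pow(X, H("sig", L, X, R, m), P) % P
    return pow(g, s, P) == rhs

keys, sig = bn_sign([5, 77, 300], "tx")
```

Note that `bn_verify` iterates over the full key list, which is the reason key aggregation is lost.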

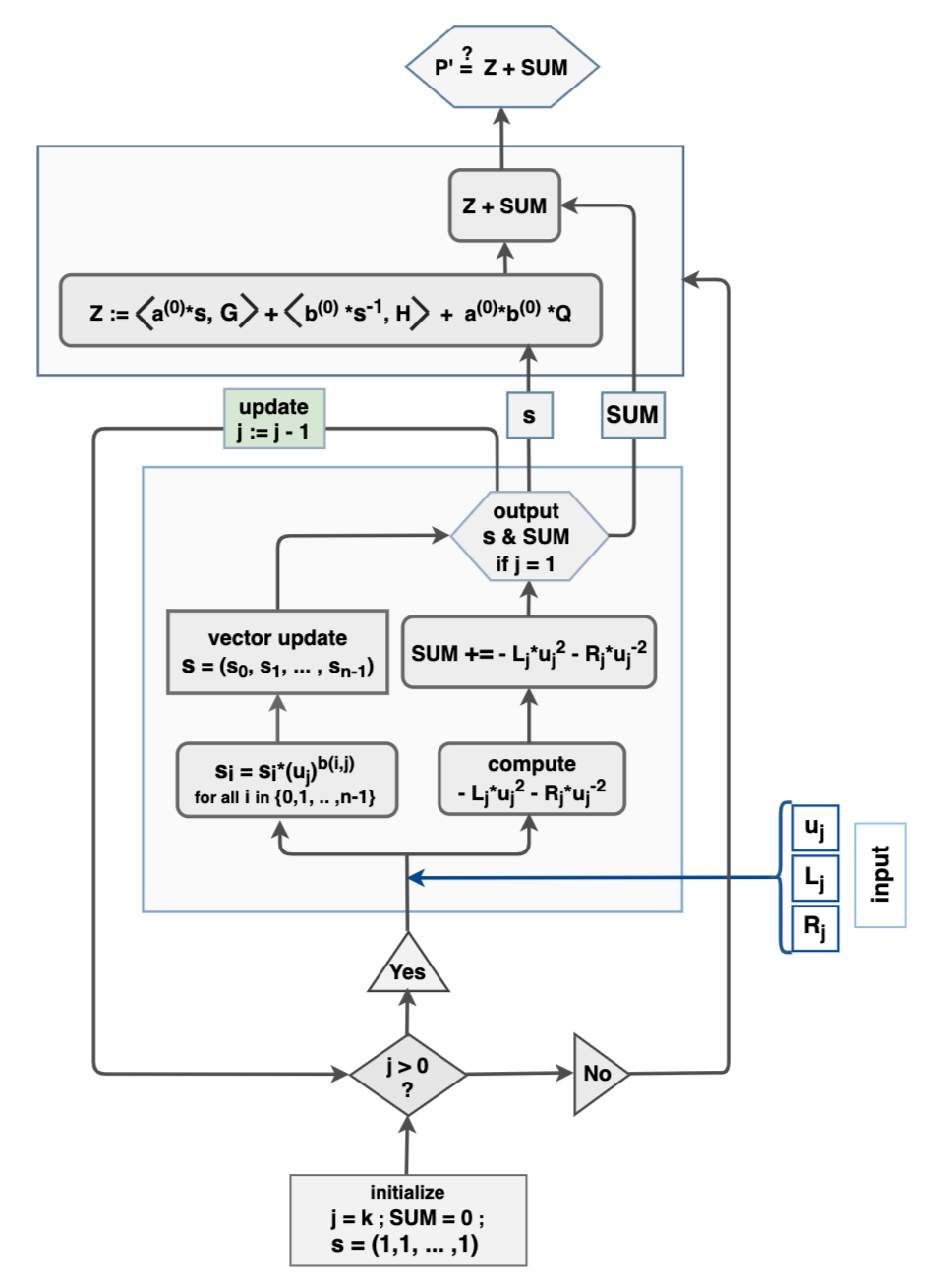

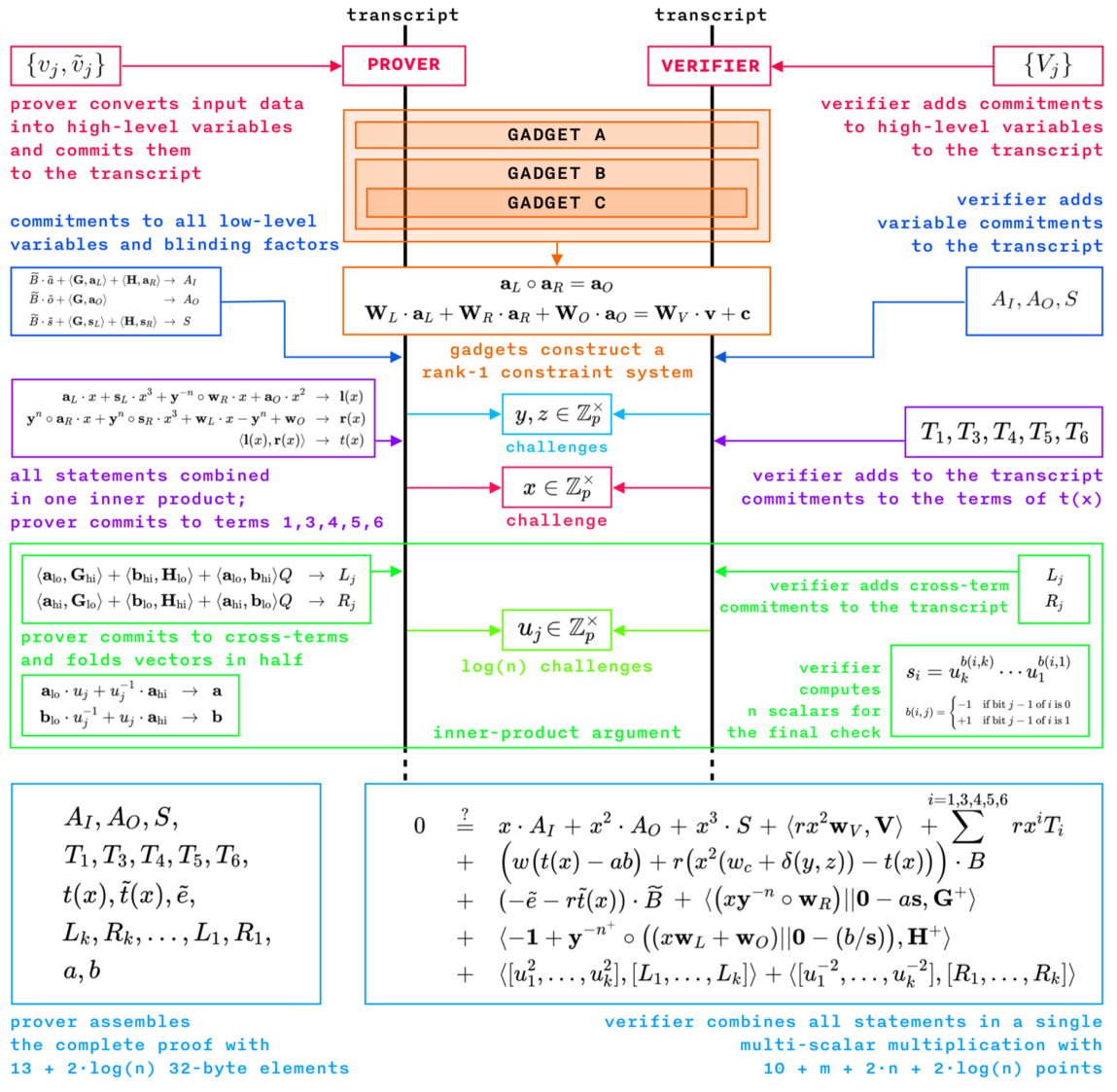

MuSig Signature Scheme

MuSig is parameterized by group parameters $ (\mathbb{G},p,g) $ and three hash functions $ ( \textrm{H}_{com} , \textrm{H}_{agg} , \textrm{H}_{sig} ) $ from $ \lbrace 0,1 \rbrace ^{*} $ to $ \lbrace 0,1 \rbrace ^{l} $ (constructed from a single hash, using proper domain separation).

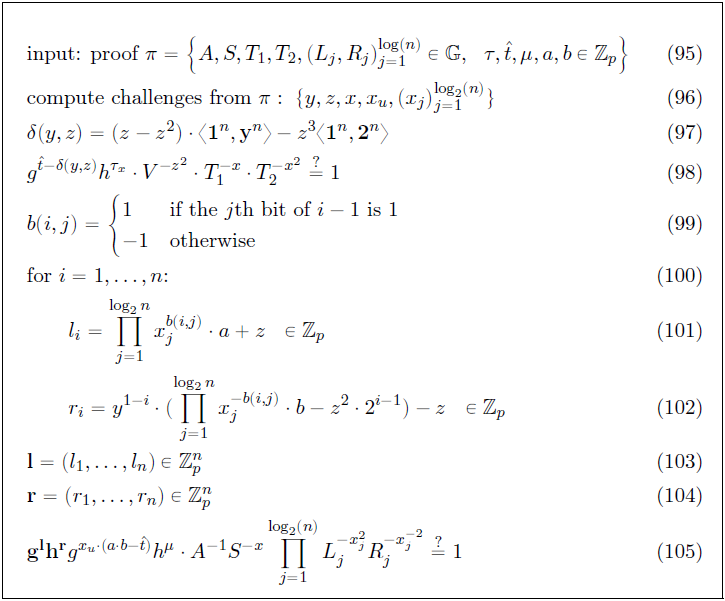

Round 1

A group of $ n $ signers want to cosign a message $ m $. Let $ X_1 $ and $ x_1 $ be the public and private key of a specific signer, let $ X_2 , . . . , X_n $ be the public keys of other cosigners and let $ \langle L \rangle $ be the encoding of the multi-set $ L $ of all public keys involved in the signing process.

For $ i\in \lbrace 1,...,n \rbrace $ , the signer computes the following

$$ a_{i} = \textrm{H}_{agg}(\langle L \rangle,X_{i}) $$

as well as the "aggregated" public key

$$ \tilde{X} = \prod_{i=1}^{n}X_{i}^{a_{i}} $$

Round 2

The signer generates a random private nonce $ r_{1}\leftarrow\mathbb{Z}_{p} $, computes $ R_{1} = g^{r_{1}} $ (the public nonce) and commitment $ t_{1} = \textrm{H}_{com}(R_{1}) $ and sends $t_{1}$ to all other cosigners.

When receiving the commitments $t_{2},...,t_{n}$ from the other cosigners, the signer sends $R_{1}$ to all other cosigners. This ensures that the public nonce is not exposed until all commitments have been received.

Upon receiving $R_{2},...,R_{n}$ from other cosigners, the signer verifies that $t_{i}=\textrm{H}_{com}(R_{i})$ for all $ i\in \lbrace 2,...,n \rbrace $.

The protocol is aborted if this is not the case.

Round 3

If all commitments $ t_{i} $ can be verified against the received public nonces $ R_{i} $ with $ \textrm{H}_{com} $, the following is computed:

$$ \begin{aligned} R &= \prod^{n}_{i=1}R_{i} \\ c &= \textrm{H}_{sig} (\tilde{X},R,m) \\ s_{1} &= r_{1} + ca_{1} x_{1} \mod p \end{aligned} $$

Signature $s_{1}$ is sent to all other cosigners. When receiving $ s_{2},...s_{n} $ from other cosigners, the signer can compute $ s = \sum_{i=1}^{n}s_{i} \mod p$. The signature is $ \sigma = (R,s) $.

In order to verify the aggregated signature $ \sigma = (R,s) $, given a lexicographically encoded multi-set of public keys $ \langle L \rangle $ and message $ m $, the verifier computes:

$$ \begin{aligned} a_{i} &= \textrm{H}_{agg}(\langle L \rangle,X_{i}) \mspace{9mu} \textrm {for} \mspace{9mu} i \in \lbrace 1,...,n \rbrace \\ \tilde{X} &= \prod_{i=1}^{n}X_{i}^{a_{i}} \\ c &= \textrm{H}_{sig} (\tilde{X},R,m) \end{aligned} $$

then accepts the signature if

$$ g^{s} = R\prod_{i=1}^{n}X_{i}^{a_{i}c}=R\tilde{X}^{c}. $$
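The three rounds and the verification step can be sketched together, with every signer simulated locally. The parameters are toy assumptions and insecure (`P = 2039`, group order `p = 1019`, generator `g = 4`). A sorted tuple stands in for $ \langle L \rangle $ and a tagged SHA-256 stands in for $ \textrm{H}_{com} $, $ \textrm{H}_{agg} $ and $ \textrm{H}_{sig} $. Function names are hypothetical.

```python
import hashlib
import secrets

# Toy parameters (assumption, insecure): order-p subgroup of Z_P^*, P = 2p + 1.
P, p, g = 2039, 1019, 4

def H(tag, *parts) -> int:
    """Single hash with domain separation: tag is "com", "agg" or "sig"."""
    data = "|".join([tag, *map(str, parts)]).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % p

def aggregate(keys):
    L = tuple(sorted(keys))                          # stand-in for <L>
    coeffs = [H("agg", L, X) for X in keys]          # a_i = H_agg(<L>, X_i)
    X_agg = 1
    for X, a in zip(keys, coeffs):
        X_agg = X_agg * pow(X, a, P) % P             # product of X_i^{a_i}
    return coeffs, X_agg

def musig_sign(secret_keys, m):
    keys = [pow(g, x, P) for x in secret_keys]
    coeffs, X_agg = aggregate(keys)                  # Round 1
    nonces = [secrets.randbelow(p) for _ in keys]    # Round 2
    Rs = [pow(g, r, P) for r in nonces]
    ts = [H("com", R_i) for R_i in Rs]               # commitments go out first
    if any(t != H("com", R_i) for t, R_i in zip(ts, Rs)):
        raise ValueError("commitment mismatch, abort")
    R = 1                                            # Round 3
    for R_i in Rs:
        R = R * R_i % P
    c = H("sig", X_agg, R, m)
    s = sum(r + c * a * x
            for r, a, x in zip(nonces, coeffs, secret_keys)) % p
    return keys, (R, s)

def musig_verify(keys, m, sig):
    R, s = sig
    _, X_agg = aggregate(keys)
    c = H("sig", X_agg, R, m)
    return pow(g, s, P) == R * pow(X_agg, c, P) % P  # g^s == R X_agg^c

keys, sig = musig_sign([5, 77, 300], "tx")
```

Once $ \tilde{X} $ is known, the final check is an ordinary key-prefixed Schnorr verification, so a verifier given only the aggregated key does not need the individual keys.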

Revisions

In a previous version of [4], published on 15 January 2018, a two-round variant of MuSig was proposed, where the initial commitment round is omitted, claiming a security proof under the One More Discrete Logarithm (OMDL) assumptions ([32], [33]). The authors of [34] then discovered a flaw in the security proof and showed that through a meta-reduction, the initial multi-signature scheme cannot be proved secure using an algebraic black box reduction under the DL or OMDL assumption.

In more detail, it was observed that in the two-round variant of MuSig, an adversary (controlling public keys $ X_{2},...,X_{n} $) can impose the value of $ R=\prod_{i=1}^{n}R_{i} $ used in signature protocols since they can choose $ R_{2},...,R_{n} $ after having received $ R_{1} $ from the honest signer (controlling public key $ X_{1}=g^{x_{1}} $ ). This prevents one from using the initial method of simulating the honest signer in the Random Oracle model without knowing $ x_{1} $, which works by randomly drawing $ s_{1} $ and $ c $, computing $ R_1=g^{s_1}(X_1)^{-a_1c}$, and later programming $ \textrm{H}_{sig}(\tilde{X}, R, m) \mspace{2mu} : = c $. The simulation fails because the adversary might have made the random oracle query $ \textrm{H}_{sig}(\tilde{X}, R, m) $ before engaging in the corresponding signature protocol.
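The simulation step can be sketched as follows, using assumed toy parameters (`P = 2039`, group order `p = 1019`, generator `g = 4`) and a hypothetical function name. The sketch covers only the computation of $ R_1 $ from a randomly drawn $ s_1 $ and $ c $; programming the random oracle is not modeled.

```python
import secrets

# Toy parameters (assumption, insecure): order-p subgroup of Z_P^*, P = 2p + 1.
P, p, g = 2039, 1019, 4

def simulate_partial_signature(X1, a1):
    """Produce (R_1, c, s_1) without knowing the private key x_1."""
    s1 = secrets.randbelow(p)
    c = secrets.randbelow(p)
    # R_1 = g^{s_1} * X_1^{-a_1 c}; the exponent is reduced modulo the order p.
    R1 = pow(g, s1, P) * pow(X1, (-a1 * c) % p, P) % P
    return R1, c, s1

X1 = pow(g, 123, P)      # the simulator only ever sees the public key
a1 = 456                 # placeholder for a_1 = H_agg(<L>, X_1)
R1, c, s1 = simulate_partial_signature(X1, a1)
```

The resulting triple satisfies $ g^{s_1} = R_1 X_1^{a_1 c} $, which is the relation a real partial signature satisfies when the challenge is $ c $.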

Despite this, there is no attack currently known against the two-round variant of MuSig and it might be secure, although this is not provable under standard assumptions from existing techniques [4].

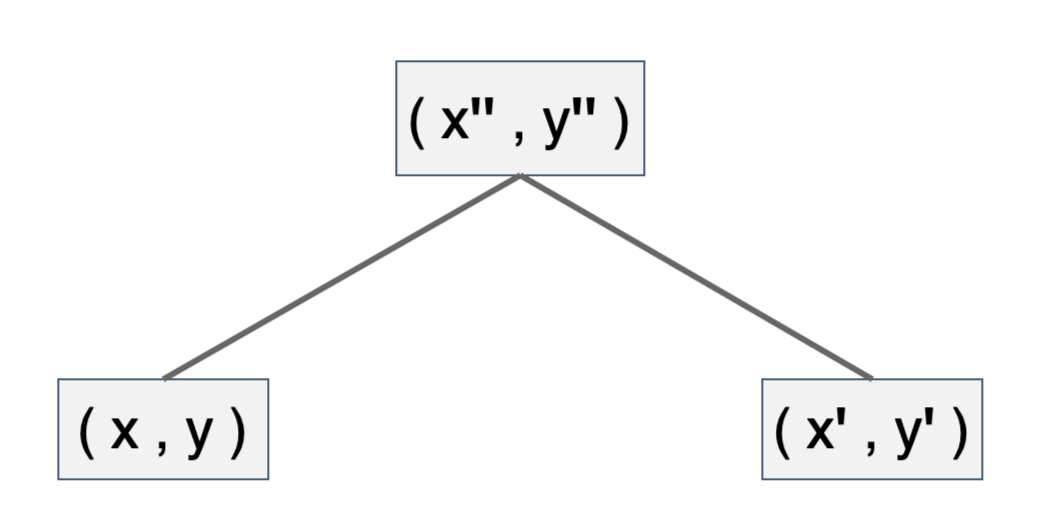

Turning Bellare and Neven’s Scheme into an IAS Scheme

In order to change the BN multi-signature scheme into an IAS scheme, [4] proposed the following scheme, which includes a fix to make the execution of the signing algorithm dependent on the message index.

If $ X = g^{x_i} $ is the public key of a specific signer and $ m $ the message they want to sign, and

$$ S^\prime = \lbrace (X^\prime_{1}, m^\prime_{1}),..., (X^\prime_{n-1}, m^\prime_{n-1}) \rbrace $$

is the set of the public key/message pairs of other signers, this specific signer merges $ (X, m) $ and $ S^\prime $ into the ordered set

$$ \langle S \rangle \mspace{6mu} \mathrm{of} \mspace{6mu} S = \lbrace (X_{1}, m_{1}),..., (X_{n}, m_{n}) \rbrace $$

and retrieves the resulting message index $ i $ such that

$$ (X_{i}, m_{i}) = (X, m) $$

Each signer then draws $ r_{i}\leftarrow\mathbb{Z}_{p} $, computes $ R_{i} = g^{r_{i}} $ and subsequently sends commitment $ t_{i} = H^\prime(R_{i}) $ in a first round and then $ R_{i} $ in a second round, and then computes

$$ R = \prod_{i=1}^{n}R_{i} $$

The signer with message index $ i $ then computes:

$$ \begin{aligned} c_{i} &= H(R, \langle S \rangle, i) \\ s_{i} &= r_{i} + c_{i}x_{i} \mod p \end{aligned} $$

and then sends $ s_{i} $ to other signers. All signers can compute

$$ s = \displaystyle\sum_{i=1}^{n}s_{i} \mod p $$

The signature is $ \sigma = (R, s) $.

Given an ordered set $ \langle S \rangle \mspace{6mu} \mathrm{of} \mspace{6mu} S = \lbrace (X_{1}, m_{1}),...,(X_{n}, m_{n}) \rbrace $ and a signature $ \sigma = (R, s) $ then $ \sigma $ is valid for $ S $ when

$$ g^s = R\prod_{i=1}^{n}X_{i} ^{H(R, \langle S \rangle, i)} $$
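A minimal sketch of this IAS variant follows, with all signers simulated locally and the commitment round left out for brevity. It assumes toy, insecure parameters (`P = 2039`, group order `p = 1019`, generator `g = 4`), a sorted tuple of pairs as a stand-in for $ \langle S \rangle $, and SHA-256 as a stand-in for $ H $. Function names are hypothetical.

```python
import hashlib
import secrets

# Toy parameters (assumption, insecure): order-p subgroup of Z_P^*, P = 2p + 1.
P, p, g = 2039, 1019, 4

def H(*parts) -> int:
    data = "|".join(str(x) for x in parts).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % p

def ias_sign(signers):
    """signers: list of (x_i, m_i) pairs, one private key and message each."""
    # Sort by (public key, message) so every signer derives the same indices.
    entries = sorted((pow(g, x, P), m, x) for x, m in signers)
    S = tuple((X, m) for X, m, _ in entries)         # stand-in for <S>
    nonces = [secrets.randbelow(p) for _ in entries]
    R = 1
    for r in nonces:
        R = R * pow(g, r, P) % P                     # R = product of g^{r_i}
    s = 0
    for i, ((_, _, x), r) in enumerate(zip(entries, nonces), start=1):
        c_i = H(R, S, i)                             # challenge bound to index i
        s = (s + r + c_i * x) % p                    # add s_i = r_i + c_i x_i
    return S, (R, s)

def ias_verify(S, sig):
    R, s = sig
    rhs = R
    for i, (X, _) in enumerate(S, start=1):
        rhs = rhs * pow(X, H(R, S, i), P) % P
    return pow(g, s, P) == rhs

S, sig = ias_sign([(11, "pay A"), (222, "pay B"), (333, "pay C")])
```

Each signer's message enters the challenge through $ \langle S \rangle $ and the index $ i $, so one signature covers all $ n $ distinct messages.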